Identification of Topics and Their Evolution in Management Science: Replicating and Extending an Expert Analysis Using Semi-Automated Methods

(This article is a digital replication of a thesis submitted to the Faculty of Graduate and Postdoctoral Affairs in partial fulfillment of the requirements for the degree of Master of Applied Science In Technology Innovation Management Carleton University Ottawa, Ontario. A PDF version is available here.)

Abstract: Latent Dirichlet allocation (LDA) is a popular generative probabilistic model that enables researchers to analyze large semantic datasets; however, few open-source software tools with Graphical User Interfaces (GUIs) are available to researchers. This study identifies an open-source software tool that, in conjunction with a popular electronic spreadsheet software application, can be used to perform topic modeling. A process is developed and evaluated against a pre-existing expert review that examines work published in Management Science on the topics of technological innovation, product development, and entrepreneurship between 1954 and 2004 (Shane and Ulrich, 2004). The process is then replicated using an expanded corpus that includes all articles published in Management Science between 2005 and 2015. The discussion includes an analysis of the process and insights generated by using topic modeling. A replicable process for researchers and suggestions for practitioners are provided.

Introduction

As part of the 50th anniversary celebrations for Management Science, a review of all articles related to technological innovation, product development, and entrepreneurship that had been published between 1954 and 2004 was conducted by then-editors Shane and Ulrich. This expert review, published in 2004, helped identify 12 themes and their evolution during the period. The results provided insights for researchers in terms of understanding what questions have been addressed in Management Science in the area of innovation and how knowledge developed over a half-century (Shane and Ulrich, 2004).

In the years since, a number of semi-automated methods have evolved that allow researchers to perform similar analytical tasks in a shorter period of time. In particular, the algorithm proposed by Blei al. (2003) – Latent Dirichlet Allocation (LDA) – is a popular topic modeling technique; however, its use outside of computer science remains infrequent, possibly due to the lack of a Graphical User Interface (GUI) on most topic modeling tools. Research comparing semi-automated methods to human-generated results in pre-planned experiments exists; however, few studies compare the results of topic modeling to an expert review that was completed before the semi-automated methods gained popularity outside of computer science and none have done so using topic modeling software with a GUI.

In this study, we review the work performed by Shane and Ulrich (2004) and reproduce it using the selected semi-automated method (topic modeling). A process is developed using a relatively new open-source topic modeling tool (Orange) and the similarities and differences in the output between the expert review and the topic modeling tool are documented. The new process is then used to review an additional ten years of articles published in Management Science (2005-2015). Researchers interested in the use of semi-automated methods – as well as those interested in trends that present themselves in Management Science – would benefit from reviewing this work.

Objective

The objective of this research is to replicate and extend an expert review using select semi-automated methods. Specifically, the objective was to develop a replicable, semi-automated process for topic modeling using open-source software and identify how the results of this process compare to an expert review. This process would then be applied to a new corpus.

Deliverables

This thesis has four (4) deliverables:

⦁ New insights into the evolution of topics in the Management Science journal within both the original corpus (1954-2004) and a new corpus (2005-2015).

⦁ A comparison of how the results of the semi-automated methods were similar and/or different from the results produced by expert editors.

⦁ Recommendations for research practice, including instructions for other researchers to replicate semi-automated expert reviews using the selected software.

⦁ Recommendations for improvements to the selected open-source software, to expedite the analysis process.

Contribution

This research makes two types of contributions, contributions to the general body of scholarship and contributions to practice.

Contributions to scholarship

This research contributes to scholarly knowledge by:

⦁ Identifying advantages and limitations of using selected semi-automated methods and topic modeling tools, as compared to the baseline of a manual expert review.

⦁ Providing insights about the evolution of topics within a pre-existing corpus from Management Science (Shane and Ulrich, 2004) as well as within an expanded corpus from the same journal.

Contribution to practice

This research contributes towards practice by:

⦁ Creating instructions for the use of selected tool(s) for achieving specific topic modeling objectives for other researchers.

⦁ Identifying manual steps that could be eliminated by the software tool developers.

Relevance

The deliverables of this research will be of relevance to the following groups: (1) Researchers, (2) Executives and Top Management Teams, and (3) Practitioners & Software Developers.

First, researchers that have access to large semantic data sets will be interested in reviewing this work. As large electronic document archives become readily available online and widely accessed by diverse communities, new tools for automatically organizing, searching, indexing and browsing large collections are required (Blei & Lafferty, 2006; 2007). Further, an understanding of the similarities and differences between the results generated by manual and semi-automated methods, along with the open-source tools available to perform similar tasks will assist researchers in determining if these tools are suitable for reviewing their semantic datasets.

Second, the time available for an individual to collect, read, interpret, and act is limited in both corporate and research environments (Uys, Schutte & Van Zyl, 2011). Businesses may have large corpora that the process and tools could be used to analyze (e.g. market analysis research). Executives and top management teams will be interested in both (a) the ability to analyze large corpora using these tools, as well as (b) the additional insights generated regarding the content of Management Science using semi-automated methods within both the original corpus as well as an expanded 10-year period.

Third, practitioners will benefit through understanding how current topic modeling tools are used, while software developers will benefit from understanding where there are unnecessary manual steps that can be removed.

Organization of the document

This research is organized into seven (7) chapters, each with subsections. The literature review (Chapter 2) provides insight regarding current methods described in current literature. The research design and method section (Chapter 3) outlines the actions required to produce the deliverables. Chapter 4 details the results generated by reviewing Corpus A and outlines the proposed topic modeling process, which is validated in the discussion section. These results validated in Chapter 5 when it is applied to a larger corpus. Chapter 6 provides an analysis of the results of this research. Chapter 7 concludes the study identifying research limitations and suggestions for future research.

Literature Review

To inform and guide this literature review, we examined the objectives outlined in Technological Innovation, Product Development, and Entrepreneurship in Management Science (Shane and Ulrich, 2004):

First, we hope that it will be useful to doctoral students and researchers interested in understanding what questions have been addressed in Management Science in the area of innovation. Second, we hope that the article will be useful to sociologists of science who are interested in understanding how knowledge develops in a field (p. 33).

These goals can be summarized as (1) identifying pre-existing topics on a given subject within a journal (i.e., “what questions have been addressed”), and (2) identifying how these topics have evolved (i.e., “how knowledge develops in a field”).

It was determined that a literature review should include information on semi-automated methods (specifically, topic modeling) and a summary of similar studies that use topic modeling to examine academic journals. This literature review provides a baseline of knowledge for reviewing the two datasets and generating labels for the topics in the topic models.

Topic Modeling

In domains such as sociology, there are three main ways to analyze texts: (1) virtuoso interpretations based on insights the readings produce, (2) produce a set of themes (based on research questions theoretical priors, or perusal of a subset of texts) and generate a coding sheet, then code the texts by reading them, or (3) search texts for keywords (based on research questions or theoretical priors) and comparing subsets of texts with respect to the prevalence of those keywords (DiMaggio, Nag, & Blei, 2013). These approaches require the researcher to generate meaning early in the review process. Further, it has been inferred that human coding of documents could be biased by properties of the documents themselves such as form, organization, and style (Radar & Wash, 2015).

DiMaggio et al. (2013) argue that a sound approach to text analysis must satisfy four conditions: explicit (for reproducibility, testing interpretations), automated (to accommodate the large volumes of text available), inductive (to permit the researcher to discover the structure of the corpus before imposing their priors on the analysis), and it must recognize the relationality of meaning by treating terms as varying in meaning across different contexts. It is their position that topic modeling satisfies each of these conditions (DiMaggio et al., 2013).

Topic modeling algorithms are a suite of machine learning methods that facilitate the unveiling of hidden thematic structures from large textual collections (Blei, Ng, and Jordan, 2003; Chang, 2016; DiMaggio et al., 2013; Song and Ding, 2014). Topic models are based upon the idea that documents are mixtures of topics, where a topic is a probability distribution over words (Blei et al., 2003; Griffiths & Steyvers, 2002; 2003; 2004; Hofmann, 1999; 2001). A strength of topic modeling is its ability to capture polysemy by allowing a word to belong to different topics; the disambiguation of different uses of a term, based on the context in which it appears, allows for the same term to appear within different topics (Steyvers & Griffiths, 2007; DiMaggio et al., 2015). The emphasis on relationality (the belief that meanings emerge out of topics) is shared by both linguists and cultural sociologists: topics may be viewed as frames (“semantic contexts that prime particular associations or interpretations of a phenomenon in a reader”) or lenses for viewing a corpus of documents (DiMaggio et al., 2013).

There are several known limitations to topic modeling. These include the requirement for the researcher to make a series of judgements around choosing stop words and the number of topics produced. The decisions made by a researcher related to these points will impact the results. Additionally, large and complex datasets can consume a considerable amount of computer memory and require extensive processing time; however, each of these issues can be partially mitigated through careful structuring of the experiments and selection of topic modeling tools.

Topic models originated with latent semantic indexing (LSI), but that method is not considered to be an authentic topic model as it is not a probabilistic model.

Probabilistic latent semantic analysis (PLSA) was based on LSI (Hoffman, 2001). An extension of PLSA is Latent Dirichlet Allocation (LDA). While there are a growing number of probabilistic models that are based on LDA, the remainder of this section will focus on LDA.

Latent Dirichlet Allocation (LDA)

Proposed by Blei et al. (2003), LDA is “a 3-level hierarchical Bayesian model, in which each item of a collection is modeled as a finite mixture over an underlying set of topics. Each topic is, in turn, modeled as an infinite mixture over an underlying set of topic probabilities” (p. 994). It is a “bag of words” technique, whereby words are considered to be independent (i.e. word order is not relevant); however, the algorithm looks at the frequencies and co-occurrence of words within the document and in common across documents (Neuhaus & Zimmerman, 2010; Rader & Wash, 2015).

LDA makes some assumptions, including: each document delineates different proportions of the topics and that each topic can be summarized by a set of words (Blei, 2012; Radar & Wash, 2015). Put another way, the words in each document are all related to the underlying topics within that document (Radar, 2015); this assumption that documents exhibit multiple topics is particularly useful for addressing heteroglossia, or the copresense of competing “voices” (perspectives / styles of expression) within a single text (Blei, 2012; DiMaggio et al., 2013). LDA uses contextual clues to group related words and distinguish between uses of ambiguous words (polysemy) (Blei et al., 2003; Chang, 2016) and has been identified as catering for synonymy (Griffiths, Steyvers, & Tenenbaum, 2007). As with other topic models, an unsolved problem is that users must pre-specify the number of topics to identify, as the number of topics is assumed to be a known, fixed number (Neuhaus & Zimmerman, 2010; Rader & Wash, 2015). It is also important to note that LDA does not produce a definitive categorization for what each document is “about” or a quality assessment of the information within each document (Radar & Wash, 2015).

LDA has been used by researchers in a variety of fields, including history, political science, public policy, sociology, science and technology studies (Choi, Lee, & Sohn, 2017; DiMaggio et al., 2013; Jockers & Mimno, 2013; Koltsova & Koltcov, 2013; Rader & Wash, 2015). While the use of structured data is common,practitioners in emerging fields such as cybersecurity have used LDA to process unstructured data such as interpersonal stories, news articles, and web pages (Rader & Wash, 2015). More recently,it has been used

to examine conversations on Twitter (Alvarez-Melis & Saveski, 2016).LDA has been used to identify trends in journals as diverse as Computers and Industrial Engineering(Uys, Shutte, & Van Zyl, 2011) and the Journal of Economic History (Wehrheim, 2017).There are many multi-journal reviews using topic modeling, including: a review of the field of development studies using 26,685 articles from 30 journals with 15 years of data (Thelwall & Thelwall, 2016), a review of physics using 3,448 articles across five journals (Peskin & Dima, 2017), and a corpus of scientific abstracts containing 5,225 abstracts with 28,414 unique terms (Blei et al., 2003).

Topic Modeling Tools

There are several open-source software tools that have topic modeling as part of their functionality. These have previously been identified by other authors, including Amin (2016) and Tapelova (2017). The combined and redacted summary of these tools are as follows:

| Package | Developer | Features |

|---|---|---|

| MALLET | McCallum (2002) | Implements LDA using Gibbs Sampling |

| LDA-C | Blei (2003) | Implements LDA with Variational Sampling. |

| Matlab Topic Modeling Toolbox | Steyvers (2005) | Implements LDA with Gibbs Sampling. |

| Stanford Topic Modeling Toolbox (TMT) | Ramage (2001) | Implements LDA. Allows visualization of topics in Excel. No longer supported by original authors. |

| GENSIM | Rehurik & Sojka (2010) | Implements LSA, PLSA, and LDA using Gibbs sampling. |

| R package topic models | Hornik & Grun (2011) | Works well with other R packages for NLP such as tm and textmineR. Built in R, an r wrapper around LDA-C by Blei (2003). |

| R package LDA | Chang (2015) | Implements LDA using Collapsed Gibbs Sampling. Implements other LDA topic models such as Supervised LDA, Correlated LDA, and Relational LDA. Works well with other R packages for NLP such as tm and textmineR. |

Heuristics for Evaluating Topic Models

The selection of an appropriate topic model involves a variety of trade-offs and judgments by the human researcher (Evans, 2014); the selection of the model that is the best fit for the specific research question requires both qualitative and quantitative validation techniques (Griffiths et al., 2007). As noted above, one of the limitations of topic modeling is the requirement for the researcher to select the number of topics. Heuristics used to evaluate topic models and determine the best fit range from the use of statistical modeling through to manual Labeling of each topic, depending on the research question being posed. It is important to note that the commonly-used adage in statistics remains true: “all models are wrong, but some are useful” (Box & Draper, 1987: 424). The following is a summary of some of the heuristics discussed in the literature:

Compute metrics (log-likelihood, perplexity)

Metrics such as log-likelihood and perplexity have been proposed to assess the quality of a topic model and determine the best number of topics (Amin, 2016; Tapilova, 2017); however, it is important to note that these heuristics are often advocated by software engineers who are comfortable with programming languages. These metrics do not necessarily agree with human assessments; Neuhaus and Zimmerman (2010) observed that domain experts judged the best number of topics lower than what the max log-likelihood metric suggested. Further, computing these metrics is only available programmatically (using R or Python-based tools), not with more user-friendly tools.

Determine Overall Importance

Multiple authors suggest it is critical to determine the overall importance of topics within a model (Mathew et al., 2016; Neuhaus & Zimmerman, 2010). Mathew et al. (2016) suggest selecting the topics that explain 90% of the papers (i.e., excluding topics that are not included in the 90% threshold) based on the expectation that topics with higher weight are more straightforward to name since they have more supporting documents. Similarly, the order of topics and words within topics is important (Mathew et al., 2016). In practice, when reviewing a model this would mean the topics should be ordered top-to-bottom, most-to-least frequent and words within topics should be ordered left-to-right, most-to-least frequent. This helps ensure the researcher is reviewing those topics and words with the highest overall importance prior to those with lower overall importance.

Evaluate Coherence

The evaluation of topics within a model for coherence is another heuristic that may be used. In his talk, Mimno (2012) discusses how a researcher can examine how the words inside a topic relate to each other; specifically, he makes several observations about coherence including the identification of both topic and word intruders. This approach is reflected in the article Reading Tea Leaves: How Humans Interpret Topic Models, whereby the authors devised two human evaluation tasks to explicitly evaluate both the quality of the topics inferred by the model and how well the model assigns topics to documents:

⦁ The first, word intrusion, measures how semantically “cohesive” the topics inferred by a model are and tests whether topics correspond to natural groupings for humans.

⦁ The second, topic intrusion, measures how well a topic model’s decomposition of a document as a mixture of topics agrees with human associations of topics with a document (Chang, Gerrish, Wang, Boyd-graber, & Blei, 2009: 2).

As noted by Neuhaus and Zimmerman (2010), as the number of topics increases the topics will become more fragmented (less cohesive). This suggests that topics should be merged. Further, Neuhaus and Zimmerman (2010) suggest that an indicator that topics are not well separated is that there is a large overlap in the set of words that appear in different topics and that these topics should be combined.

Labeling Topics (Headwords, Word Clouds)

Topic labels are a means by which it is easier to refer to the topics than the automatically-generated labels:

Assigning labels to topic clusters is a subjective process. The labels I have assigned here are most frequently derived from the topic headwords. Some may find the labels unhelpful or even controversial. […] By default the modeling process assigns topics a number (e.g. topic 1, topic 2, etc.). While referring to topics by number is certainly less controversial, it’s not a very useful way to talk about them. These labels should be read as “general terms of convenience” and not as definitive statements on the ultimate meaning of the word cluster (Jockers, 2013, para 2).

Other authors suggest that it is valid to use the top terms to label the topics, as “there is very little information associated with the latter words. Hence, the evidence to hand suggests that generated labels from the first few terms [are] valid” (Mathew et al., 2016: 6). Examples from their work include:

⦁ Program analysis: program, analysis, dynamic, execution, code, java, static

⦁ Source code: code, source, information, tool, program, developers, patterns

⦁ Developer: developer, project, bug, work, open, team, tools

In short, topics with lower weights may not have many supporting documents and therefore the keywords may become more arbitrary. If it becomes difficult to generate a topic label, this can be an indicator there are too many topics.

Finally, as LDA can allocate the same term to multiple topics (due to polysemy), visualization of the terms can assist researchers in determining appropriate topic labels. Topic word clouds have been used as a heuristic by several authors, including Jockers (2013).

Summary

In summary, LDA is popular, and is used in a variety of research areas for examining large semantic datasets to identify latent topics. It is particularly useful for eliminating bias of manual coding. The inputs to the topic models are critical – the decisions around choosing stop words, the number of topics produced, and the scope of the corpus will influence the final results, introducing researcher bias.Different heuristics are available to researchers to help them identify the correct topic model for their situation.These are a mixture of qualitative and quantitative approaches, including: log-likelihood, perplexity, overall average topic weights, coherence, headwords and reviewing word clouds. Topic models are a lens for viewing the corpus; selection should be based on whether substantively meaningful and analytically useful topics are identified.

Research Design

Approach

Text analysis methods can be divided into two groups: deductive methods that are based on a pre-defined codebook with a set of relevant categories, and inductive methods that share an explorative character aiming to identify certain attributes of the text content (Gunther & Quandt, 2016). An inductive approach is helpful for generating initial information regarding the text corpus when researchers have little prior knowledge about its content, offering a way to subset the data by identifying relevant documents for a following (manual) in-depth analysis and generally reducing the manual workload (Gunther & Quandt, 2016). This research uses and inductive approach as it is explorative, aiming to identify certain attributes of the text content by a non-expert (i.e., a graduate student).

Table 3-1 identifies the steps carried out in this research. The subsections detail the steps taken to generate the results and deliverables of this research. These steps are adapted from Amin (2016) and Tapelova (2017); however, modifications have been made for clarity and to account for (a) multiple corpora and (b) an analysis of the process and tools.

| Step | Description | Activity |

|---|---|---|

| i | Literature Review | Identify and define key characteristics of topic modeling that will allow the researcher to identify topic modeling tools and heuristics. |

| ii | Select Topic Modeling Tools | Selection of tools and methods suitable for replicating and extending the expert topic review based on the literature review. |

| iii | Acquire Data | Obtain copies of Management Science articles published from 1954-2004 previously identified by Shane and Ulrich’s review (2004) (“Corpus A”) and extract titles, abstracts and publication dates. |

| iv | Preprocess & Process Data | Preprocessing data (stop words, normalization) and create models for different number of topics using selected implementation of topic modeling algorithm (LDA in Orange) |

| v | Select & Interpret Topic Model | Evaluate the models based on selected heuristics. Identify the model with the optimal number of topics for this study. Begin interpretation of model through Labeling of topics. |

| vi | Analyze the Model | Generate charts detailing the number of publications published per year per topic, as well as the distribution of topics over time. Review topic evolution and discuss interesting trends. |

| vii | Compare Results | Compare results of final categorization and tables to Shane and Ulrich (2004). |

| viii | Extend Analysis | Apply the process (steps iii-vi) to a larger corpus (“Corpus B”: all articles published in Management Science from 2005-2015). Demonstrate how the process works on a larger corpus that could not be processed manually. |

| ix | Summarize Process | Generate summary of replicable process for generating topic models based on insights generated. |

Research Design

Unit of Analysis & Study Period

The unit of analysis for this study is two corpora, both generated from articles published in the Management Science journal. These consist of:

⦁ Selected article and title abstracts from an expert review. The articles – which had been identified by Shane and Ulrich (2004) as being related to the topics of technological innovation, product development, and entrepreneurship – span a period from 1954 to 2004. This will be identified as “Corpus A.”

⦁ All article titles and abstracts published spanning a ten-year period from 2005 to 2015. This will be identified as “Corpus B.”

Only using article titles and abstracts in relation to academic articles (rather than the entire text of the papers) when generating a topic model simplifies data collection. Mathew et al. (2016) summarize their reasons for using a similar dataset as follows:

(a) Titles and abstracts are designed to index and summarize papers; (b) Obtaining papers is a huge challenge due to copyright violations and its limited open source access; (c) Papers contain too much text which makes it harder to summarize the content. Abstracts on the other hand are much more succinct and generate better topics (p. 5).

Shane and Ulrich (2004) also used titles and abstracts to help narrow the articles for review in their initial data preprocessing steps; as such, using the titles and abstracts ensures this process is similar to that of the selected expert review.

Sample Size

The sample size for the two corpora are as follows:

⦁ Corpus A: The 248 articles identified by Shane and Ulrich (2004) published in Management Science between 1954-2004 that discuss technological innovation, product development, and entrepreneurship.

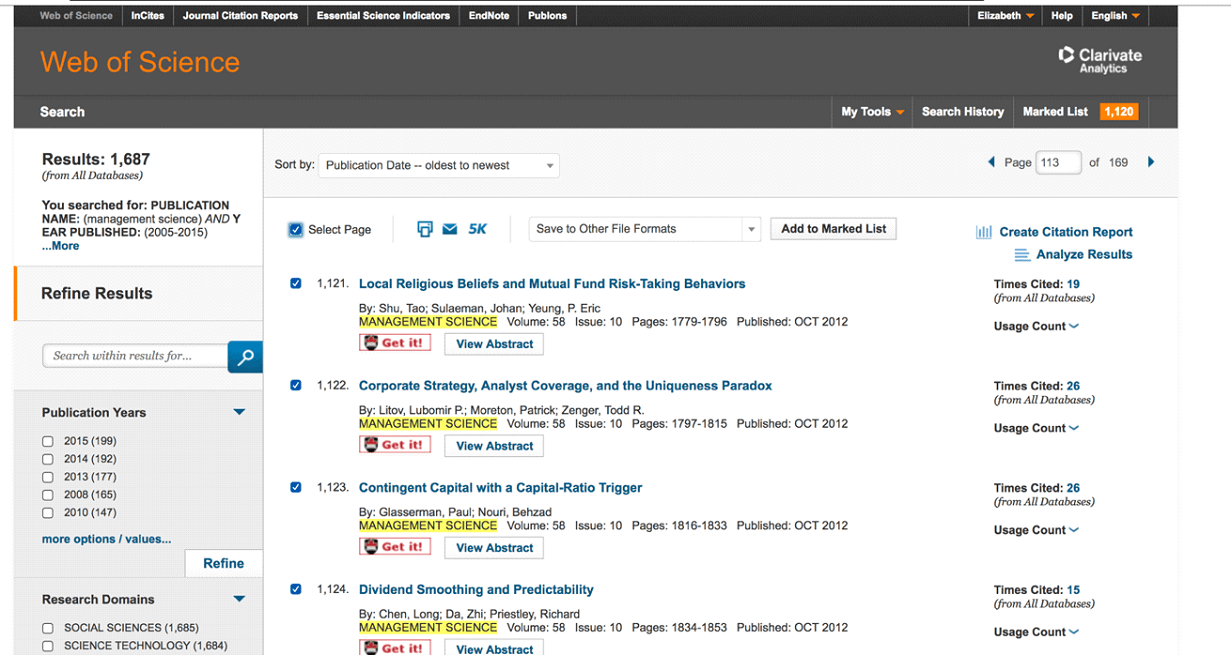

⦁ Corpus B: The 1625 articles published in the Management Science journal from 2005-2015 on all topics.

Summary

These two corpuses were selected as they will generate different information:

⦁ The results from the review of Corpus A will be compared against the expert review previously generated by Shane and Ulrich (2004), to determine the efficacy of the proposed process and tools.

⦁ Once the proposed process has been validated, it will be used to analyze Corpus B. The resulting topic model will extend Shane and Ulrich’s work as well as provide a point of comparison regarding the speed of the proposed process in analyzing a larger corpus.

Overview of Steps

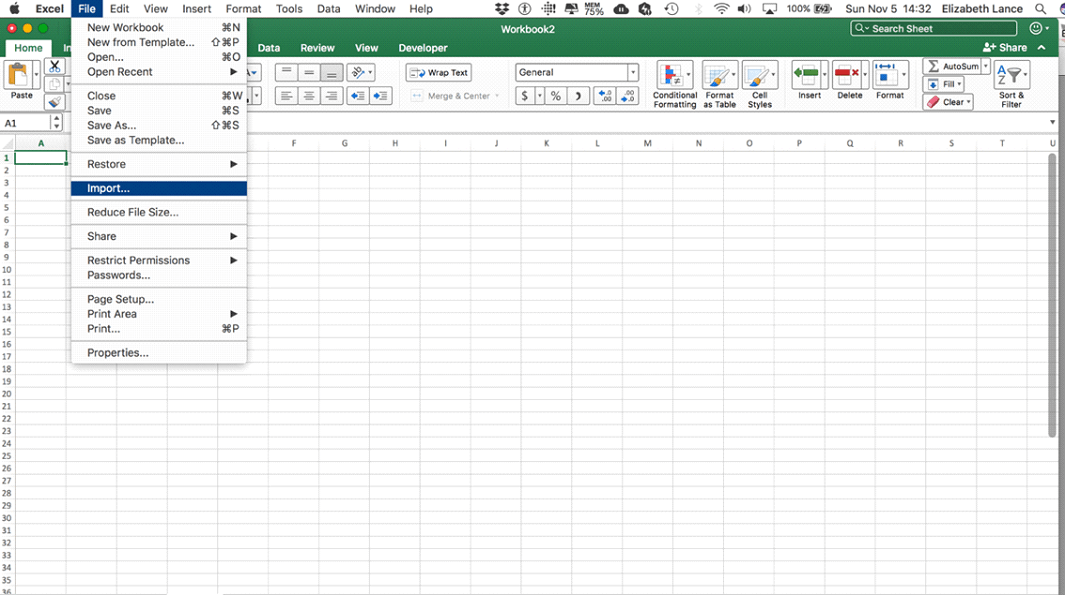

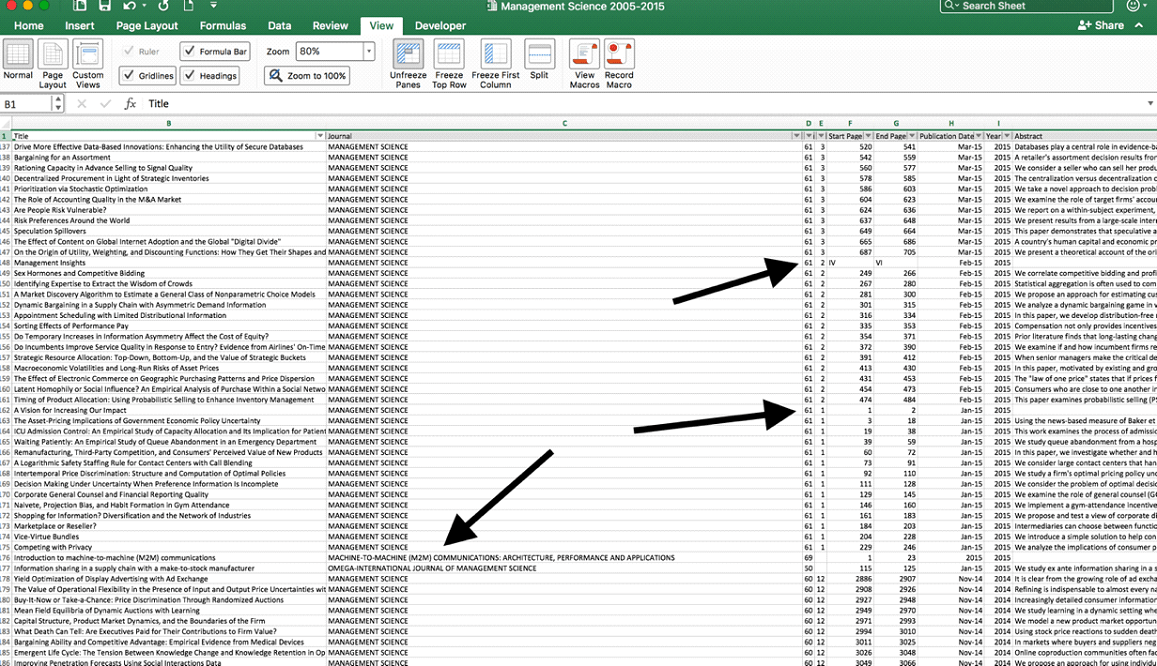

Data Acquisition

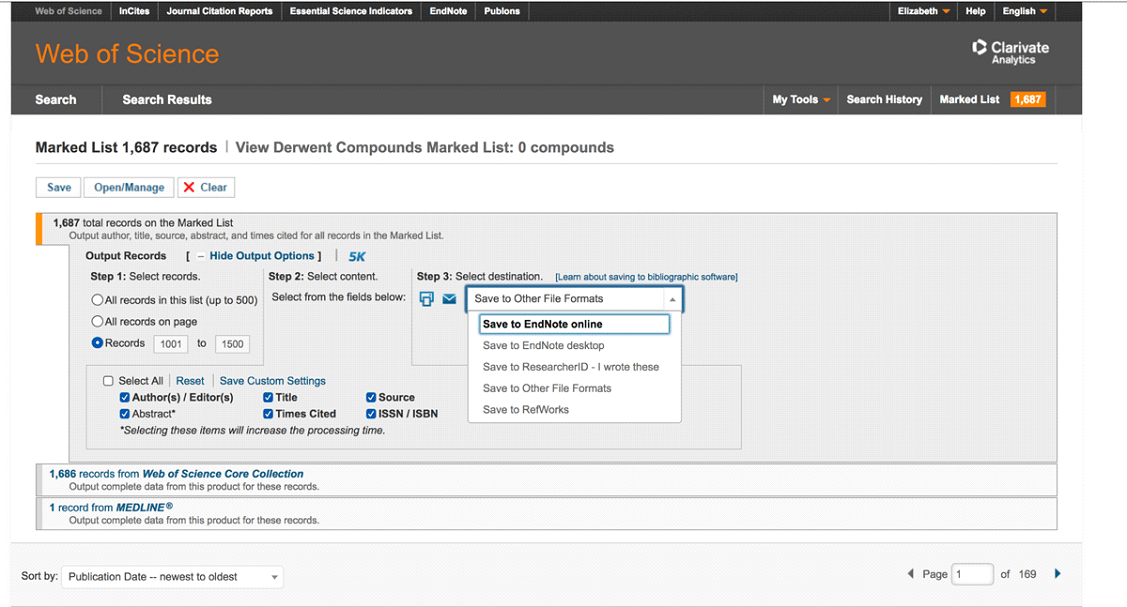

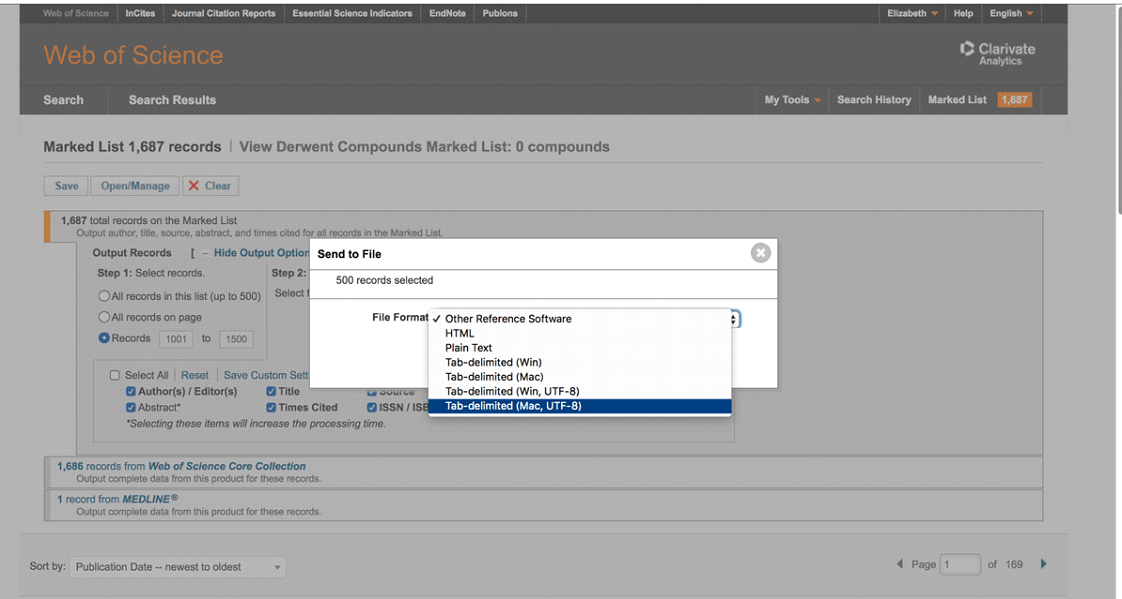

Copies of Management Science articles published from 1954-2004 previously identified by Shane and Ulrich’s review (2004) (“Corpus A”) were obtained, extracting relevant information (title, abstract, publication year). The Web of Science database was used to collect information regarding all articles published in Management Science from 2005-2015 (“Corpus B”).

Data Analysis

Select Topic Modeling Tools

Tools and methods suitable for replicating and extending the expert topic review were selected based on the literature review. This step also included installing different software applications prior to selecting a topic modeling tool.

Preprocess & Process Data

Identified and eliminated obvious errors in the data (“clean” the data), including addressing issues that arose during preprocessing. Preprocess data (remove stop words, normalization) and create models for different number of topics using selected implementation of topic modeling algorithm. For each model, export reports and spreadsheets that contain key information (topic IDs and distribution per article, top 10 topic words, word clouds, topic word distributions).

Select & Interpret Topic Model

Evaluate the models based on selected heuristics. Identify the model with the optimal number of topics and remove low-value topics as required. Using methods for Labeling topic models, begin interpreting topic model. Remove any additional low-weighted or incoherent topics.

Analyze the Model

Use topic models to generate charts detailing the number of publications published per year per topic, as well as the distribution of topics over time. Review topic evolution and discuss trends.

Compare Results

Compare results of final categorization and results to expert review previously published by Shane and Ulrich (2004). Identify areas of similarity and disparity, discuss results.

Extend Analysis (Corpus B)

Apply the process (steps iii-vi) to a larger corpus (“Corpus B”: all articles published in Management Science from 2005-2015). Demonstrate how the process works on a larger corpus that could not be processed manually.

Summarize Process

Based on the insights generated through the selection of the tools and the selected heuristics, generate a summary outlining the replicable process for generating topic models

Topic Modeling Tools

While the author had previous experience with the software program R and had identified multiple studies that utilized MALLET (Amin, 2016; Jockers & Mimno, 2013; Rader & Wash, 2015; Tapelova, 2017), both programs were deemed unsuitable either through the requirement that the user is familiar with command-line programming (R) or the requirement for the installation of additional software (ex. MALLET requires Python).

An open-source tool that has not been discussed in the literature in relation to topic modeling is Orange (https://orange.biolab.si/). Orange is a machine learning and data visualization tool with interactive data analysis workflows and a number of easily-installed add-ons that increase functionality. It has a simple GUI which allows individuals to use the tool without any programming knowledge. Any add-ons that are required (e.g. Textable, which includes topic modeling, word cloud, and text preprocessing sub-modules) to provide the required functionality are easily added from a panel within the program.

As the objective was to select software that could be used “out of the box” with minimal programming knowledge on the part of the user, Orange was selected for the generation of the topic models. It is expected the results obtained using Orange will be of similar quality to using MALLET from the command line or programmatically as the LDA components are, in fact, a wrapper around MALLET. For the analysis phase, Excel was used as a software license is available free of charge to all students at the university. Further, as a widely-used electronic spreadsheet tool in the private sector, any formulas required can easily be found using an online search. The selection of these two tools is intended to minimize the complexity and cost of the topic modeling tools.

Management Science (1954-2004)

Generate Topic Models

Data Acquisition

Each article from the corpus identified by Shane and Ulrich (2004) (“Corpus A”) was retrieved from the Web of Science database. Typos and duplicates were identified and removed from the original corpus, reducing the number of articles from 250 to 248. The authors name, article publication year, title, abstract, and keywords were collected and consolidated into a CSV file. Several older documents either (a) did not have abstracts in the Web of Science database, although abstracts had appeared in the journal articles, or (b) did not have an abstract. For the former, open-source OCR software was used to extract the abstracts. For the latter, the Shane and Ulrich (2004) description was used instead, as the first paragraph of the articles did not provide a relevant summary.

Data Preprocessing

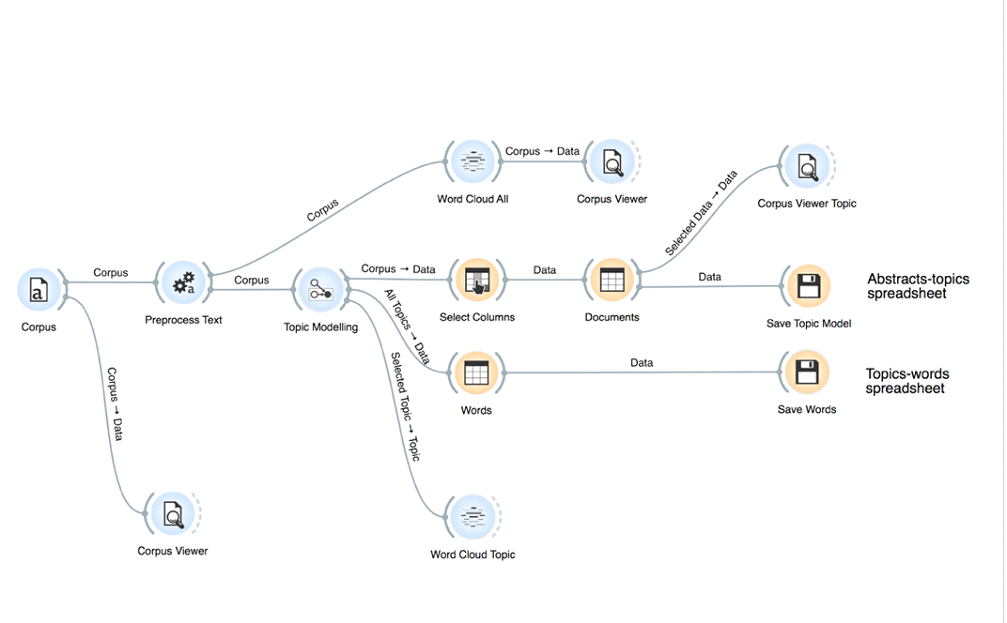

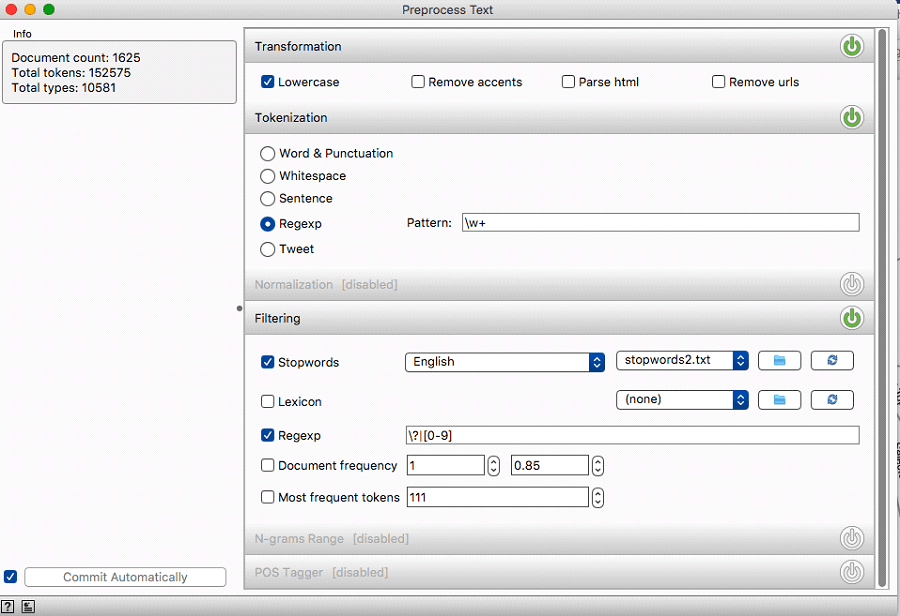

The selected topic modeling software (Orange) was downloaded and a workflow developed.

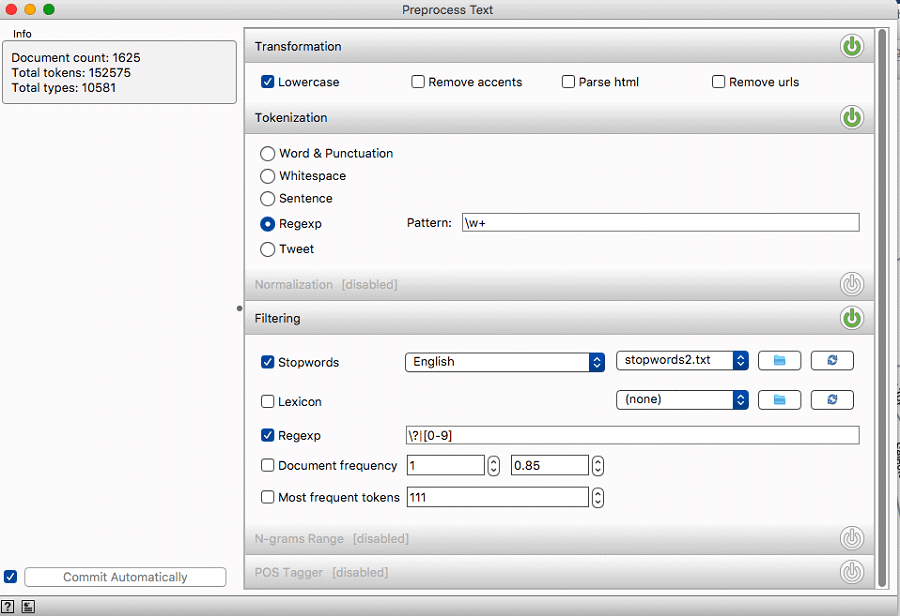

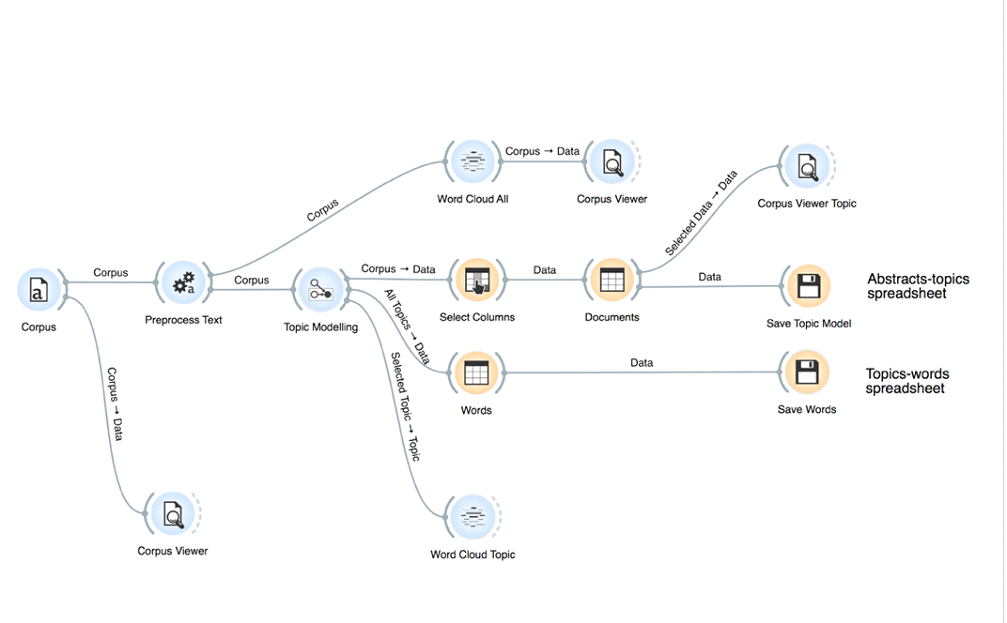

Figure 4-1 – Configuration of Preprocessing Step (Orange)

The initial stop word list was determined to be too short; a longer list was identified and downloaded (https://www.ranks.nl/stopwords) and words were added to that list. These included common terms found in academic articles (ex. article, paper, etc.), locations, and authors’ names. This is consistent with Jockers and Mimno (2013), who noted the importance of removing words that occur so frequently – and with such regularity in all documents – that they overwhelm topical variability.

Further manual preprocessing of the data was required as, during trial runs, it was discovered that the preprocessing step was removing critical key terms which contained punctuation (R&D and R, D & E). These terms were standardized so that they would not be affected by the removal of punctuation (randd and RDandE), and several unintentional errors were manually corrected (Linear & deterministic had become LineaRandDeterministic). Further, embedded references that contained names of other researchers were manually removed from the source file. These changes ensured that the input data to the topic model contained minimal noise.

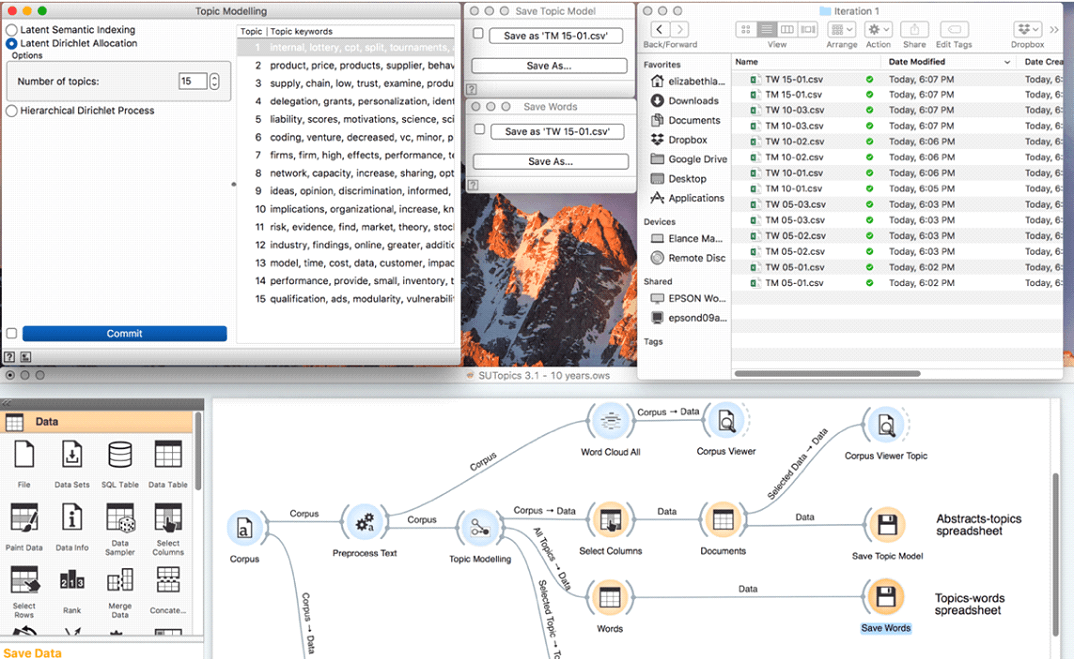

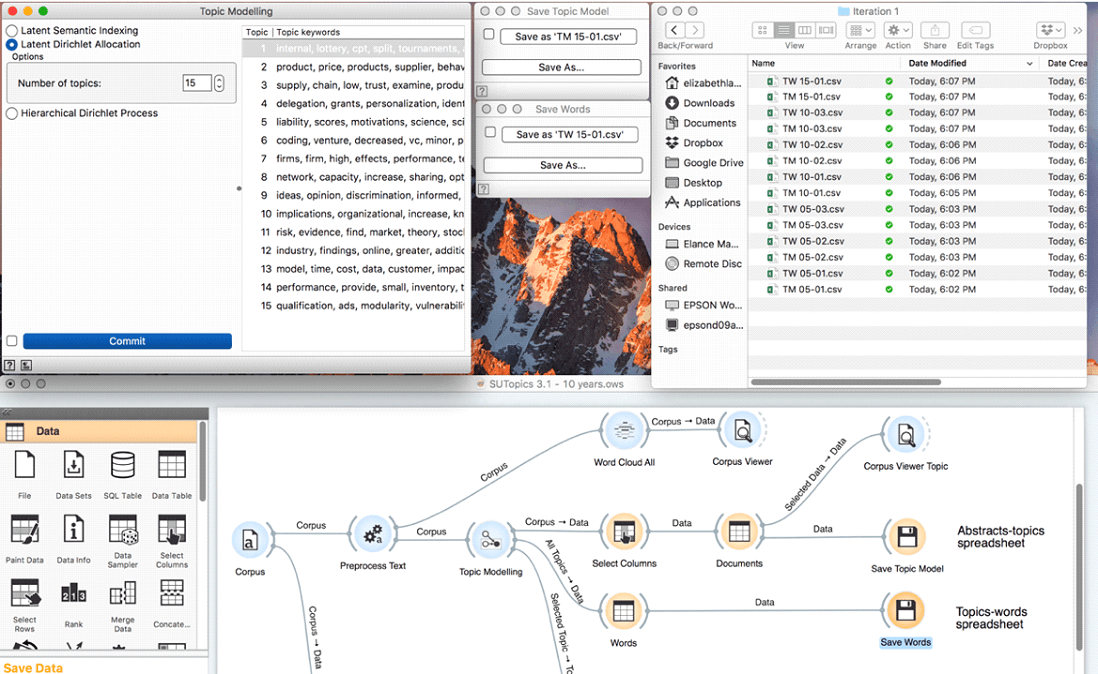

Generation of Topic Models

The following process was used to generate the topic models for review:

⦁ Generated models for topic models (5, 10, 15 … 40 topics). For each model:

⦁ Generated and saved word clouds for each topic in each model.

⦁ Saved outputs (Models, Words) as CSV files (Figure 4-3).

⦁ Saved top 10 keywords for each topic model as a report; exported report as PDF.

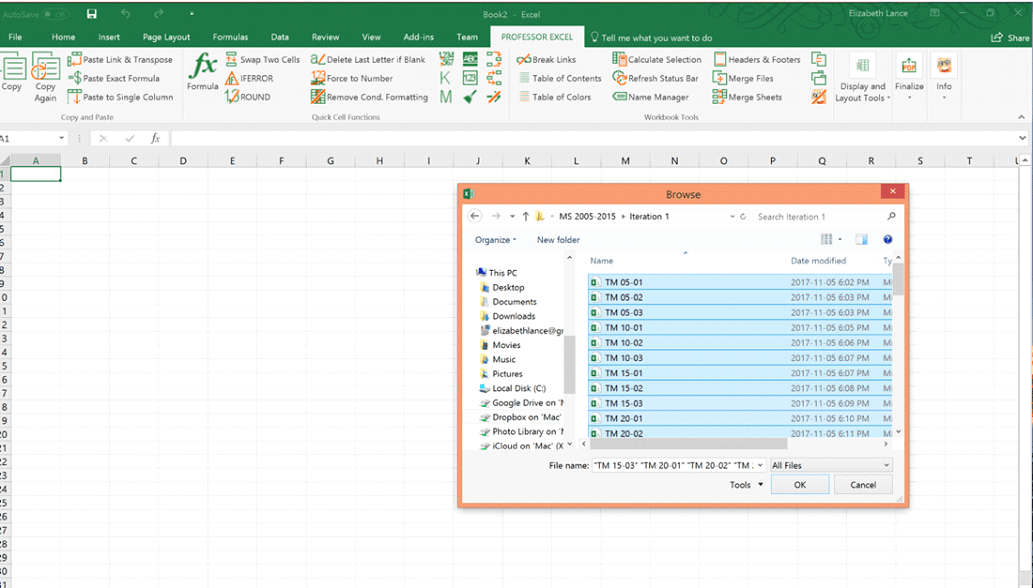

⦁ Combined all Model and Word CSV files into a single Excel file (Figure 4-4).

Figure 4-3 – Generating Topic Models (Orange)

Figure 4-4 – Combining Topic Models (Excel)

Selection of Optimal Topic Model

As noted in the literature review, there are several quantitative approaches that could be used to evaluate the topics and select a topic model; however, quantitatively significant models are not necessarily the most interpretable by humans (Chang et al., 2009). Further, computing those metrics is beyond the scope of the selected topic modeling software, Orange.

Since the purpose is to find the model with useful interpretation, the selection of the optimal model is inherently subjective as it is based on the researcher’s initial research questions and their ability to interpret the model. The author identified three key metrics that would help determine the model with the optimal number of topics:

⦁ Overall importance of topics;

⦁ Coherence of topic keywords; and

⦁ Identification of recurring topics (duplication of topic keywords) between models.

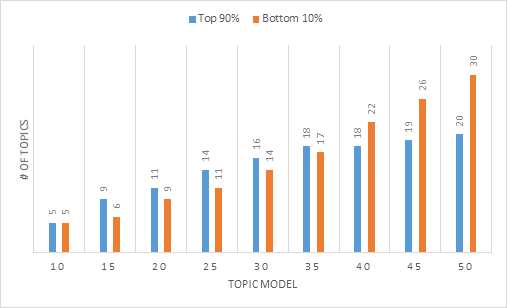

Overall Importance of Topics

The overall importance of the topics was determined and topics of low importance were removed by identifying the topics that explain 90% of the papers (i.e., the topics are sorted starting with the most probable topic and their probabilities until the 90% threshold of coverage is reached). This approach was used by Mathew et al. (2016), with the following rationale:

While our LDADE reported many more topics than these top 11, those occur at diminishingly low frequencies. [Other researchers] also report that 90% of the topics [in Software Engineering] can be approximated by about a dozen topics (p. 6).

This metric can help the reviewer narrow the scope of which topics to review and identify those topics that occur at higher frequencies. A secondary benefit of this approach is that the topics are now sorted by weight: topics with higher weight are often easier to interpret as they have more supporting documents (Mathew et al., 2016).

Steps: To calculate the overall importance of topics the following steps were conducted:

⦁ Determined the average weight of each topic model (using =AVERAGE function in Excel).

⦁ Sorted topics left-to-right for highest-to-lowest average weight.

⦁ On a new sheet (“Summary”), listed the top 10 keywords for each model, the topic model, topic number, topic weight (transposed from individual sheets).

⦁ Identified topics that covered 90% of papers for each model. Identified these in a separate column.

⦁ On a new sheet (“90%”), created a pivot table that identified the number of topics per model that represented the top 90% of topics.

⦁ On a new sheet (“Dashboard”), added tables to visualize the summarized results from the pivot tables.

Output: The following tables were generated:

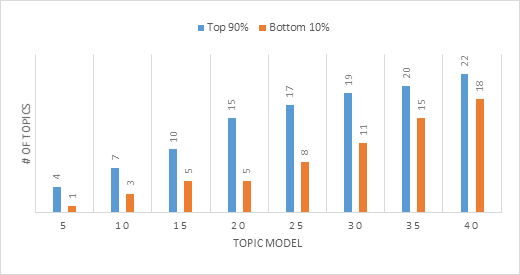

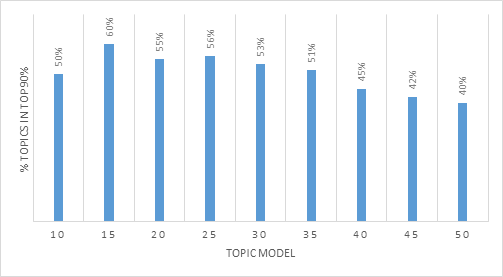

Figure 4-5 – 1954-2004 Importance of Topics (Count)

Figure 4-6 – 1954 -2004 Importance of Topics (Percentage)

Analysis: As the number of topics increases, the coverage decreases (i.e., a smaller % of the topics relative to the topics represents to top 90%). While this heuristic could suggest that Topic Model 5 or 20 has the best coverage (80% and 75%, respectively), there is a concern that if there are too few topics, the topics will be too general. As we explored the higher number of topics, some low weight topics gained a greater weight and were included in the 90%. Review of the above suggests that the number of topics stabilizes between 30 and 35; however, the number of topics should be determined by considering all identified heuristics.

Next Steps: Proceed to next heuristic. All the topics that represented less than 10% of the total topics were excluded from the next step of the review process.

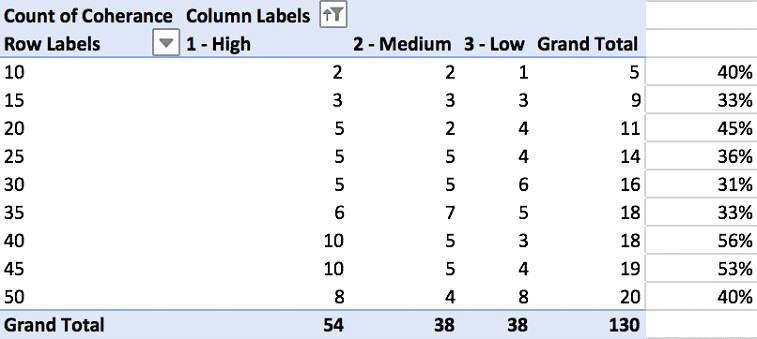

Coherence

Mathematically rigorous calculations of model fit (such as log likelihood and perplexity) do not always agree with human opinion about the quality of a model (Chang et al., 2009). While there is a newer formula that has been identified as possibly being able to correlate well with human judgement (‘C_v topic coherence’ in GENSIM), this formula relies on judging how often the topic words appear together in a corpus; however, the definition of ‘together’ remains subjective (Mimno, 2012).

While the headwords are those that are the most heavily weighted, another useful heuristic is reviewing overall coherence of the top 10 keywords identified. A manual review to evaluate the quality of the topics inferred by the model based on whether there was obvious word or topic intrusion can assist the reviewer in identifying the topic model with the greatest cohesion.

Steps: Coherence was determined by conducting the following steps:

⦁ On the “Summary” sheet, reviewed the keywords for the topics that are included in the top 90% of each topic model for coherence. In a new column, assigned a score of high, medium, or low cohesion in a separate column;

⦁ On a new sheet (“Coherence”), created a pivot table that identified the number of topics per model and counted the coherence labels of high, medium, or low cohesion; and

⦁ Generate charts to visualize the summarized results from the pivot table.

This identified the model with the highest number of cohesive terms, both in terms of those labeled as “high” as well as the combined score for “high” and “medium”.

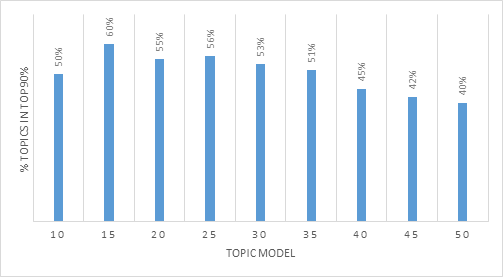

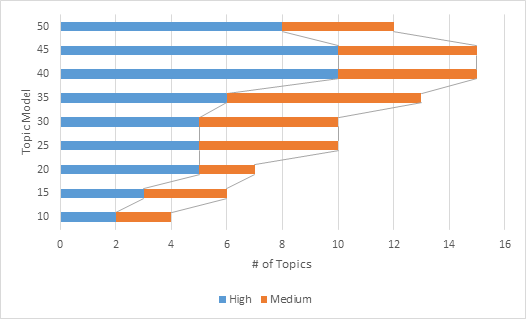

Output: The following tables were generated:

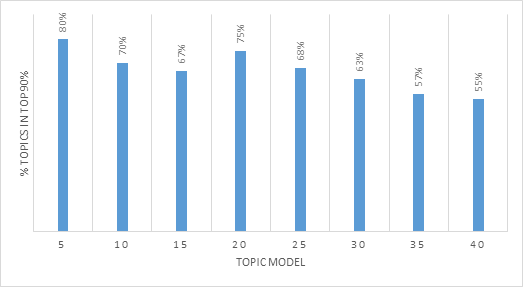

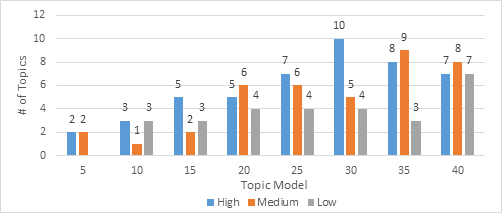

Figure 4-7 – 1954-2004 Topic Coherence (Bar Chart)

Figure 4-8 – 1954-2004 Topic Coherence (Stacked Bar Chart)

Analysis: In reviewing the above chart, Topic Model 35 appears to have the highest proportion of medium and high coherence topics; however, Topic Model 30 has the highest proportion of high coherence topics. There is a “peak” where model 30 has the most coherent topics; the topics need to be evaluated further to determine if this model is the correct fit.

Next Steps: Review topics to and identify recurring topics based on top 10 keywords.

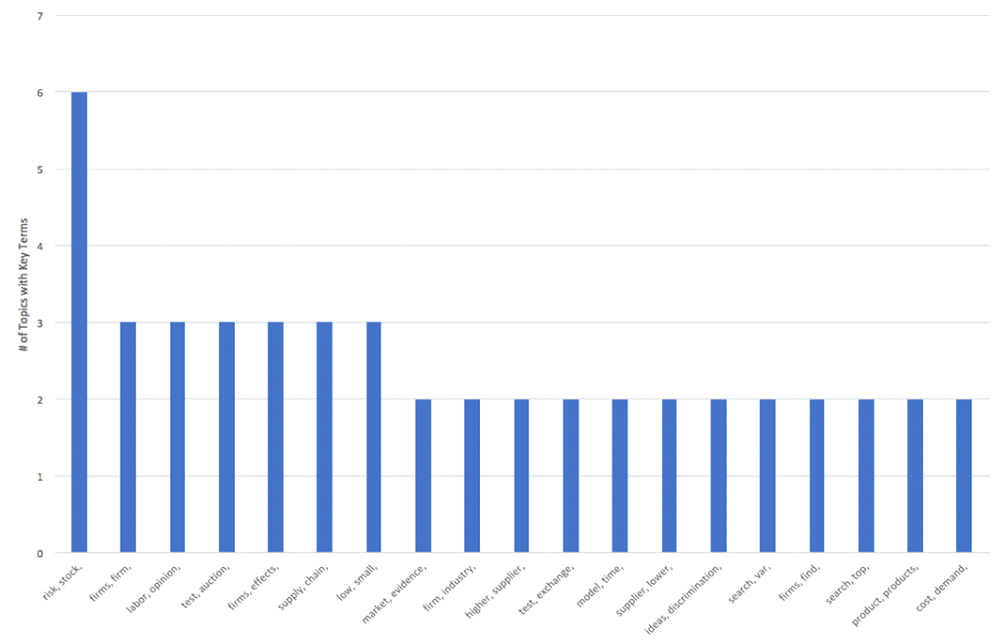

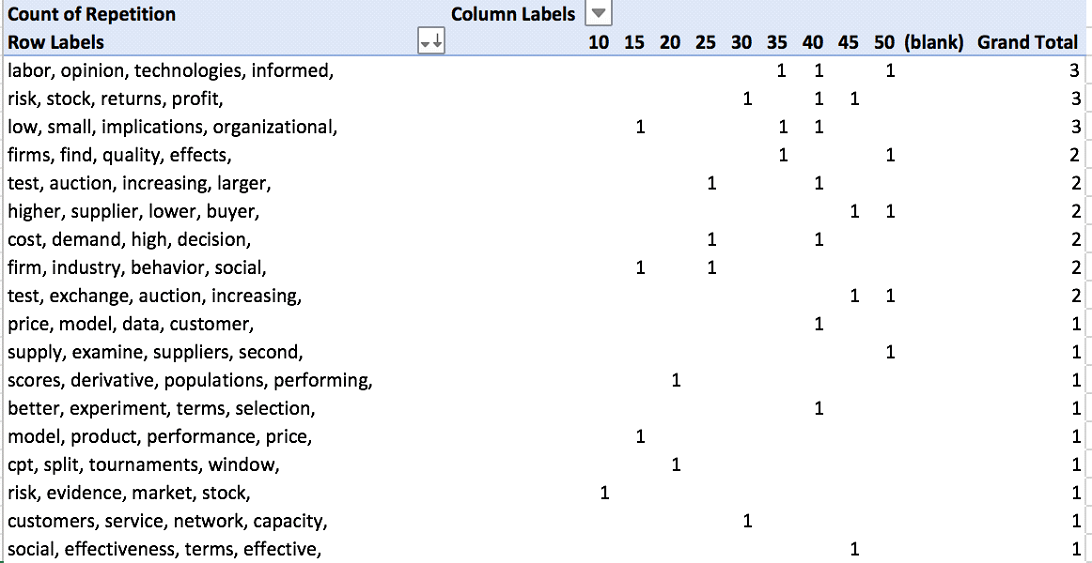

Recurring Topics / Keywords

The topic modeling software generates a list of the top 10 terms associated with each topic. If there are topics that appear repeatedly across multiple topic models, this would suggest that the topic is a relatively stable one.

Steps: To identify recurring topics, the following steps were performed:

⦁ Created formula in Excel to show only the first 2-4 words for each topic label and added a column where the number of words to include is identified:

=TRIM(LEFT(SUBSTITUTE(E2,” “,REPT(” “,1000),R2),1000)), where E2 is the cell containing text to be trimmed and R2 is the cell that identifies how many words to include.

⦁ Generated pivot tables and charts to identify # of identical topics for 3, 4, and 5 words; and

⦁ Generated pivot table and chart to identify model containing most stable topics.

Output: The following tables were generated:

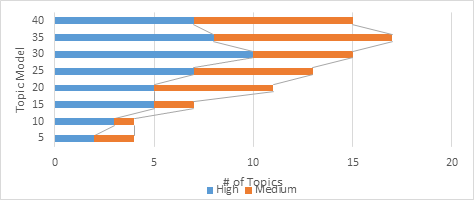

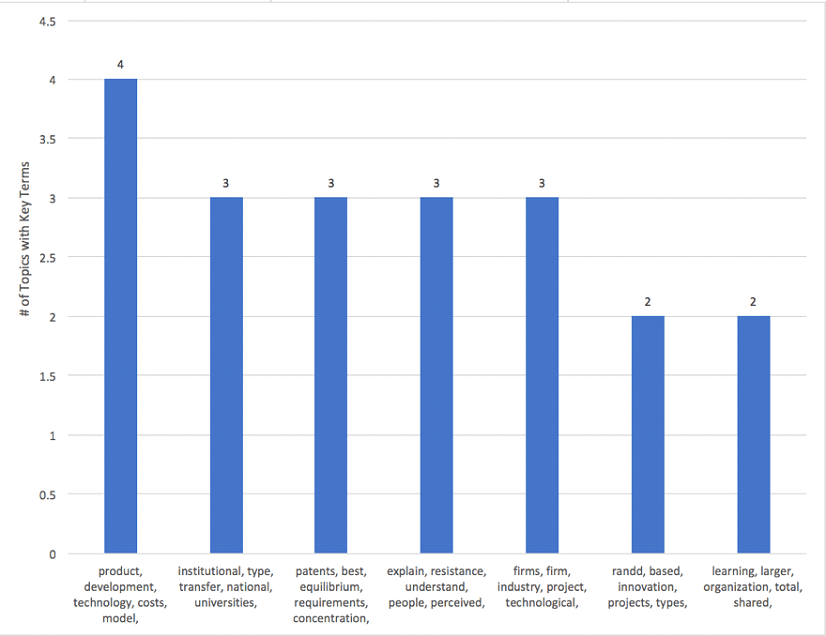

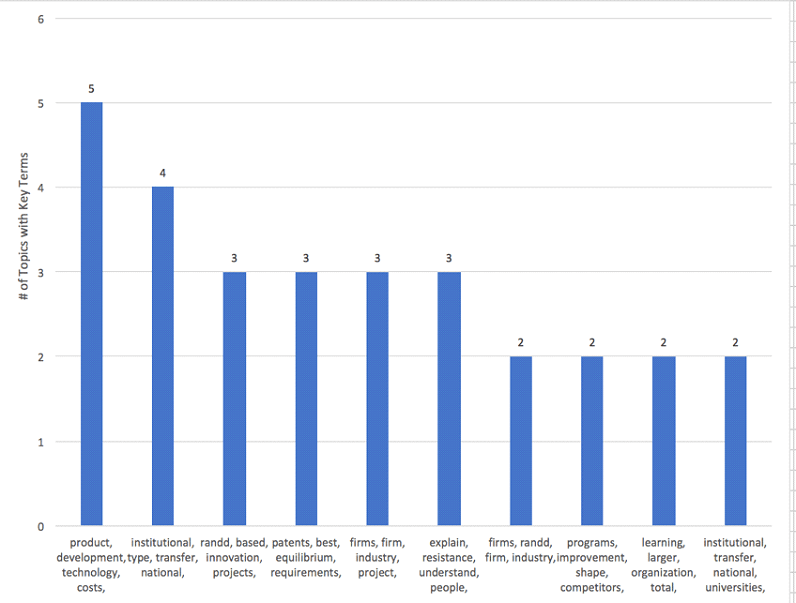

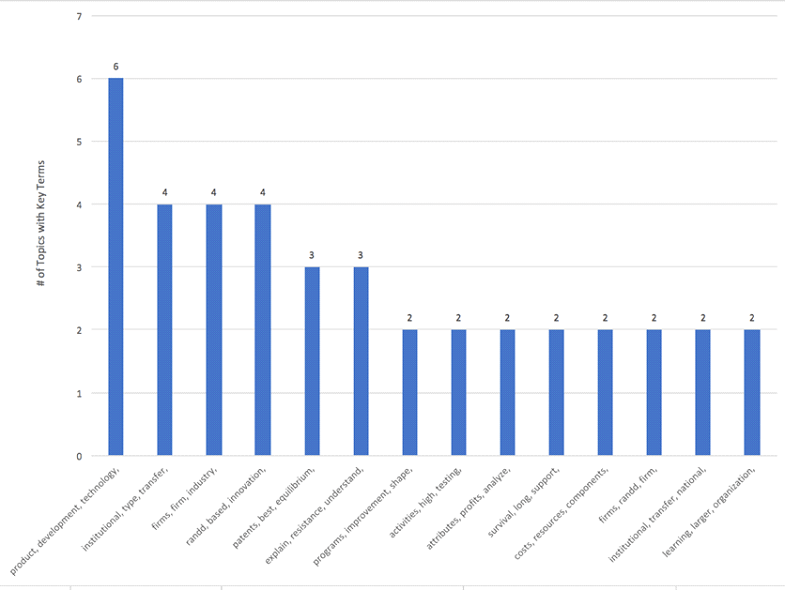

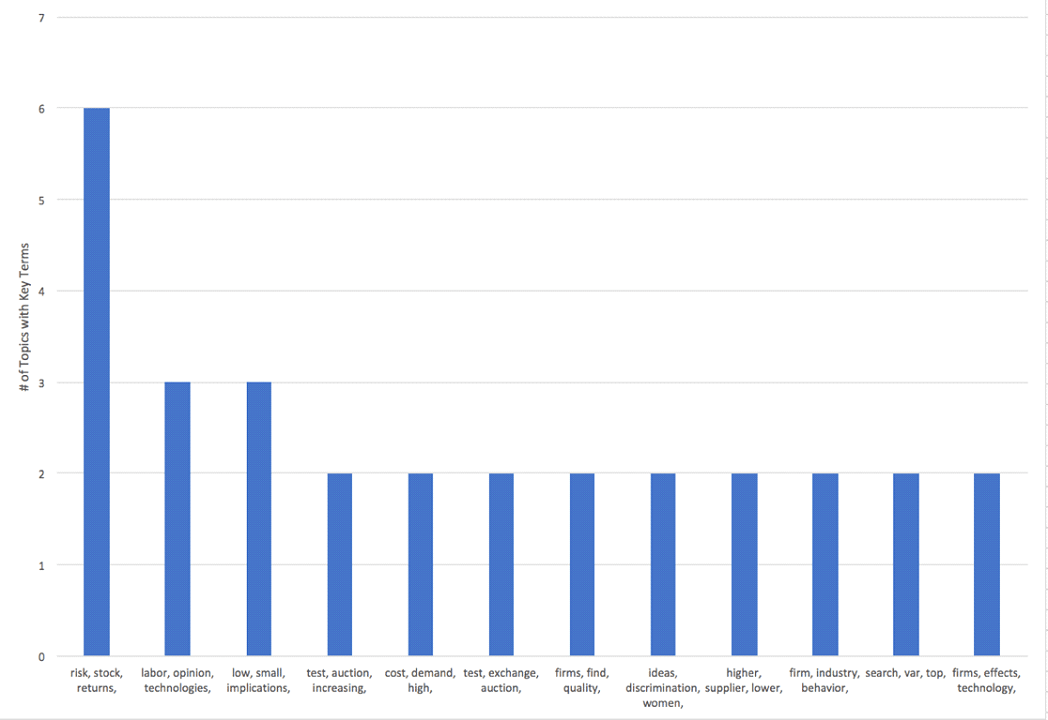

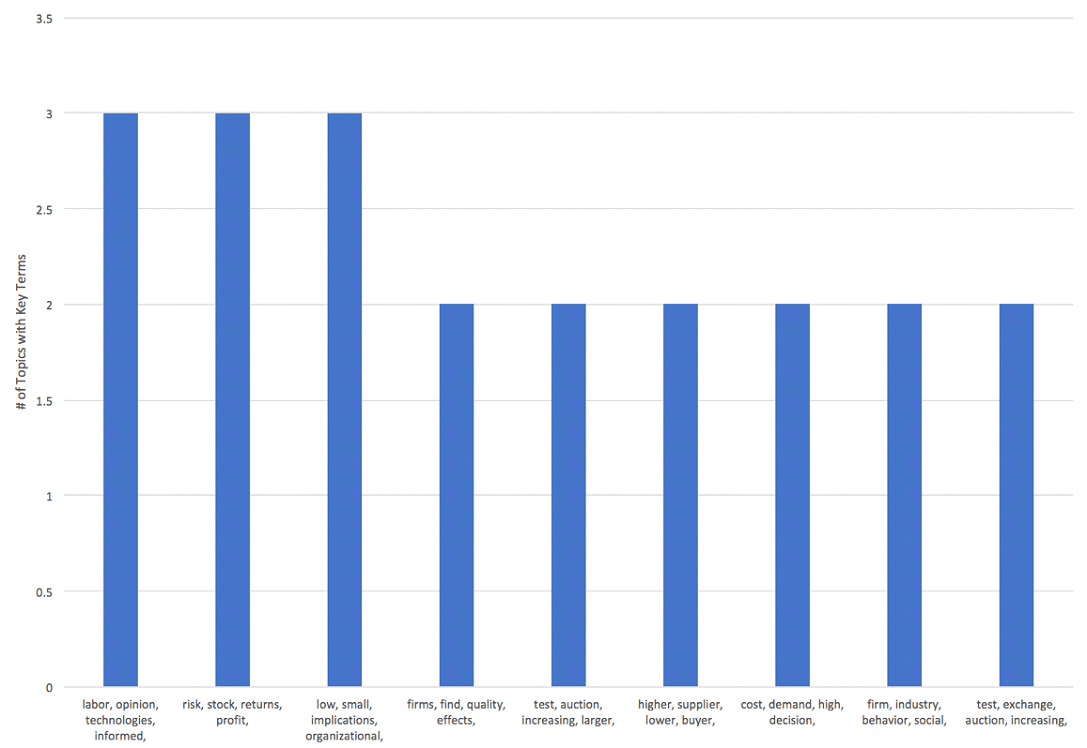

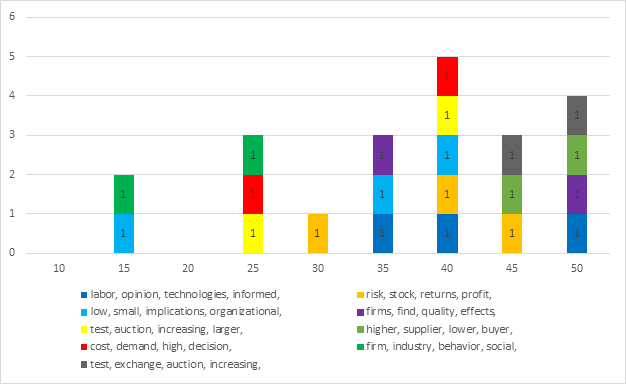

Figure 4-9 – 1954-2004 Topics with five (5) identical headwords

Figure 4-10 – 1954-2004 Topics with four (4) identical headwords

Figure 4-11 – 1954-2004 Topics with three (3) identical headwords

Analysis: Once the scope is limited to three headwords, nuanced changes begins to occur (ex. institutional, type, transfer vs. institutional, transfer, national). It is reasonable to conclude that there will be several topics in the final model that are relatively stable. The final model should include all the following topics: Product Development, Institutional Transfer, R&D / Innovation, Patents, Explaining Resistance / Understanding People, Firms / Projects, and Organizational Learning.

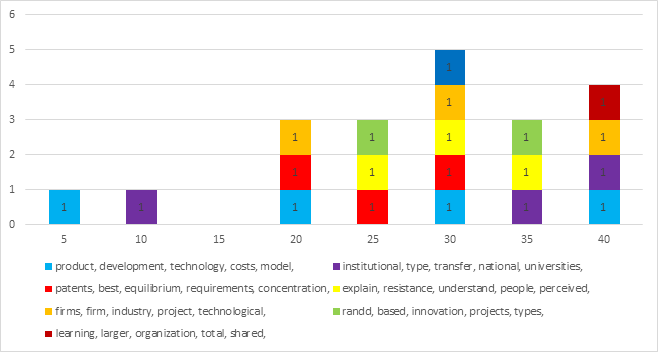

Figure 4-12 – 1954-2004 Stable Topics Per Topic Model

In reviewing the topic models, Topic Model 30 has all the above topics and contains the most recurring topics. Topic Model 30 was selected for further review.

Labeling Topics (Initial Interpretation)

Topic Headwords

The initial results from the above analysis narrowed the scope of the topic models and identified several key topics that should appear in the final model. The topic model was further reviewed to determine if they could be labeled in a manner that is easily understood using the first few words. Labeling based on the first few terms or “topic headwords” has been identified as appropriate by multiple authors (Jockers, 2013; Mathew et al., 2017). While this approach is appropriate for topic models with many topics (ex. Jockers had > 500 topics), our objective was to ensure that all topics could be labeled in a manner that is helpful to a reader that has minimal knowledge of the area.

Steps: The process to generate topic headwords were:

⦁ Organized the topics in terms of topic weight from highest to lowest (top to bottom).Inserted column to identify rank and number accordingly (see Table 4-1, below).

⦁ Used the TRIM formula in Excel to show only the first 2-4 words for each topic labeled as “High” or “Medium” coherence.

⦁ Reviewed automatically generated labels. Adjusted for ease of reading (as required).

Output: The following table was generated:

| Topic # | Rank | Keywords | Generated Label |

|---|---|---|---|

| 20 | 1 | randd, based, innovation, projects, process, types, cost, organizations, multiple, decision | randd, |

| 26 | 2 | product, development, technology, costs, model, resources, time, market, variety, basic | product, development, |

| 28 | 3 | firms, firm, industry, project, technological, production, lead, management, implications, benefits | firms, firm, industry, project |

| 30 | 4 | activities, high, testing, concentrated, technical, customer, managers, findings, form, economic | activities, high, testing, |

| 6 | 5 | performance, design, find, differences, insights, extent, existing, respect, simulation, alternate | performance, design, |

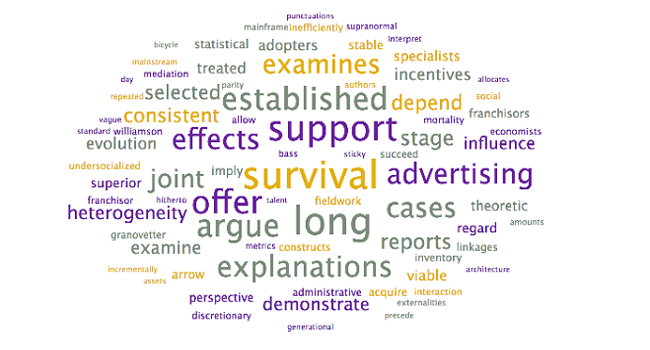

| 7 | 6 | survival, long, support, argue, offer, established, cases, advertising, explanations, effects | survival, long, |

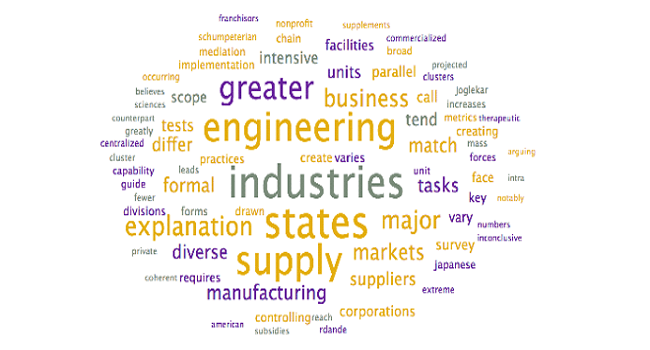

| 17 | 7 | industries, states, engineering, supply, greater, explanation, major, business, markets, manufacturing | industries, states, engineering, |

| 12 | 8 | attributes, profits, analyze, segments, determine, investigation, internal, customers, configurations, price | attributes, profits, analyze, |

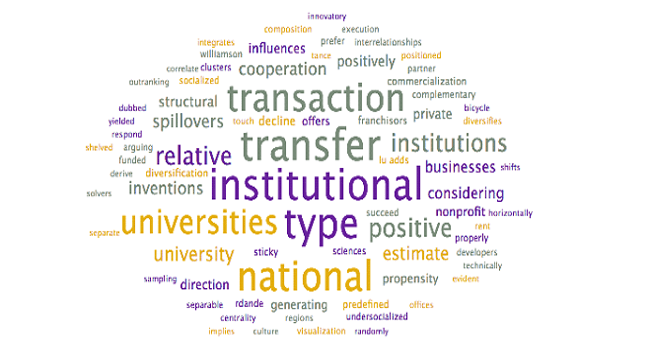

| 19 | 9 | institutional, type, transfer, national, transaction, universities, relative, positive, institutions, university | institutional, type, transfer, |

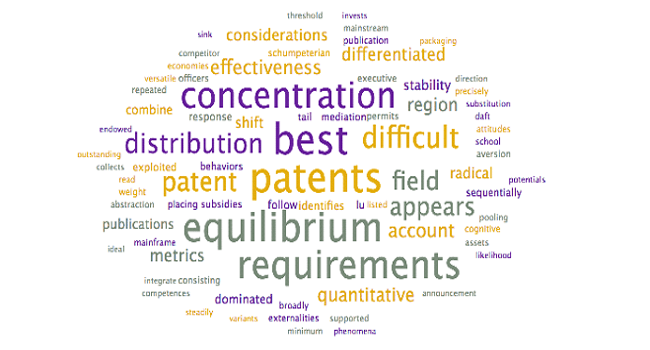

| 23 | 10 | patents, best, equilibrium, requirements, concentration, distribution, difficult, patent, field, appears | patents, best, |

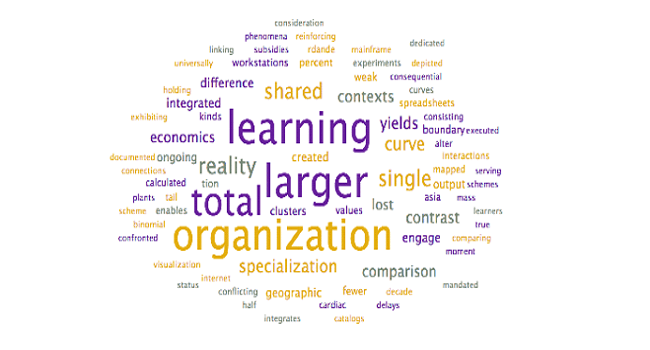

| 16 | 11 | learning, larger, organization, total, shared, reality, single, curve, specialization, contrast | learning, larger, organization, |

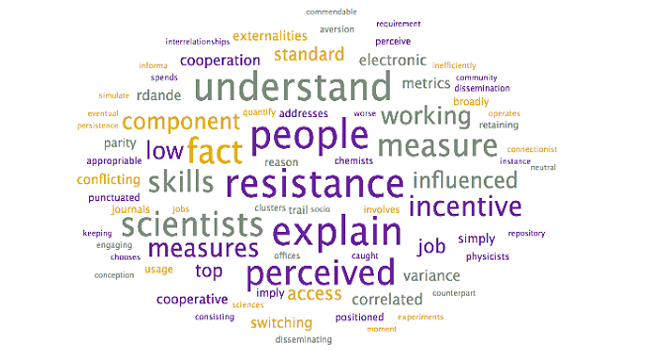

| 22 | 12 | explain, resistance, understand, people, perceived, scientists, fact, skills, measure, incentive | explain, resistance, understand, people |

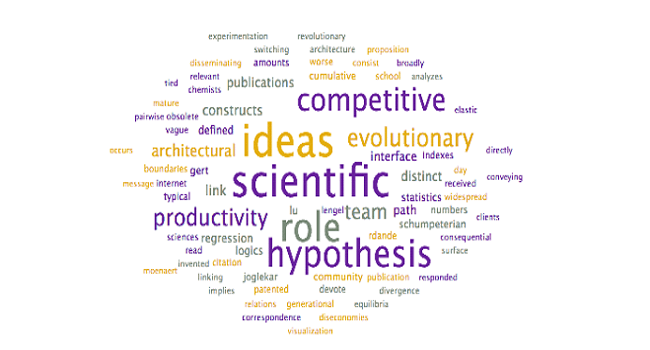

| 11 | 13 | scientific, ideas, hypothesis, role, competitive, evolutionary, productivity, team, target, architectural | scientific, ideas, |

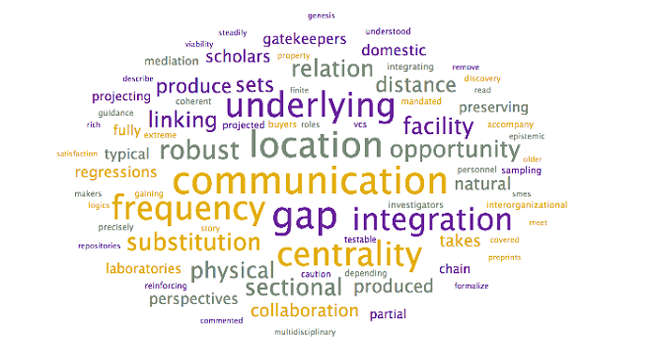

| 13 | 14 | communication, location, gap, frequency, underlying, centrality, integration, robust, relation, opportunity | communication, |

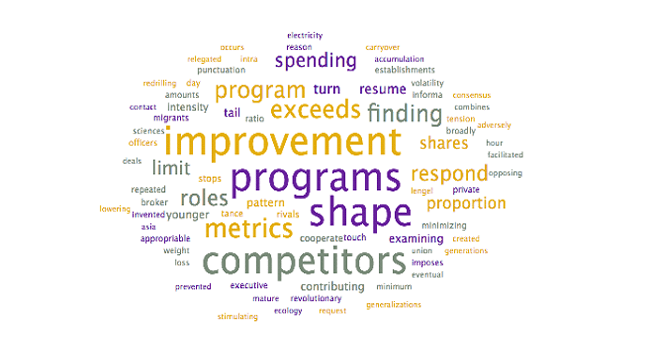

| 25 | 18 | programs, improvement, shape, competitors, metrics, program, exceeds, finding, roles, respond | programs, improvement, |

| Original Topic Label | Label (Generated in Excel) | Label (Human Readable) |

|---|---|---|

| 20 | randd, | R&D [randd] |

| 26 | product, development, | Product Development |

| 28 | firms, | Firm Projects |

| 30 | activities, high, testing, | Testing Activities |

| 6 | performance, design, | Performance / Design |

| 7 | survival, | Survival |

| 17 | industries, states, engineering, | Engineering |

| 12 | attributes, | Attributes |

| 19 | institutional, type, transfer, | Institutional Transfer |

| 23 | patents, | Patents |

| 16 | learning, larger, organization, | Organizational Learning |

| 22 | explain, resistance, | Explaining Resistance |

| 11 | scientific, ideas, hypothesis, | Scientific Ideas |

| 13 | communication, | Communication |

| 25 | programs, improvement, | Improvement Programs |

Analysis: The above labels appear reasonable when reviewed in table format; however, some of the terms have little meaning when viewed in isolation or as part of a ten (10) word summary. What is meant by Survival, Firm Project, Attributes or Improvement Programs? There is insufficient context to determine what is meant by some of these terms.

Next Steps: Additional review is required for identified topic labels, using additional heuristics (word clouds, titles/abstracts).

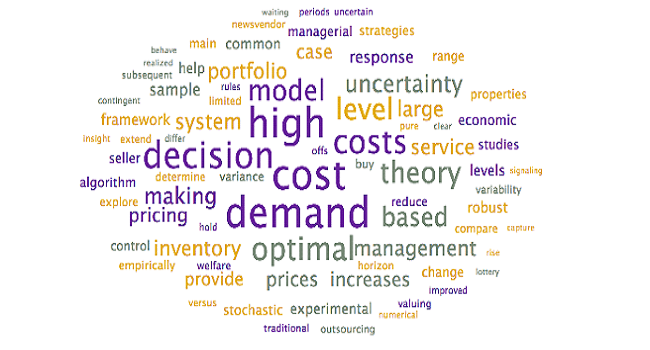

Word Clouds

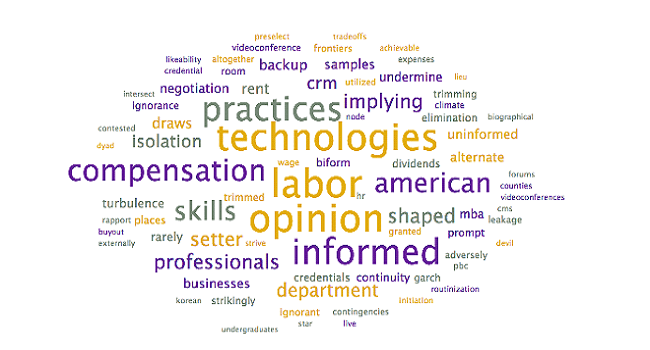

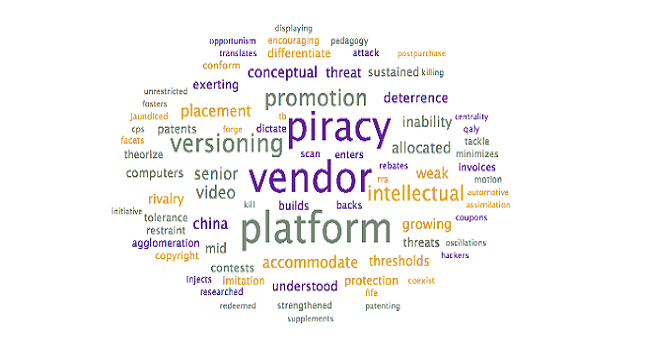

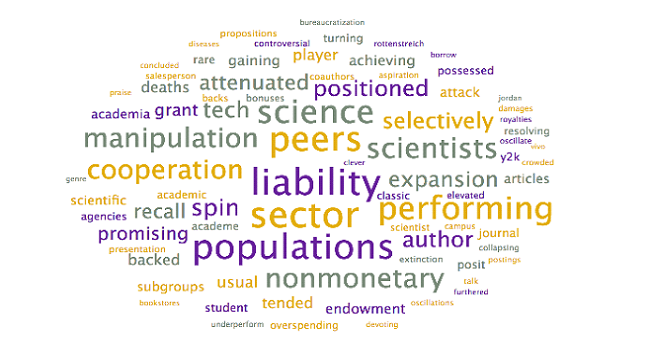

To assist with the interpretation and verification of each of these topics, word clouds were generated to see if additional context could be provided. Specifically, the word clouds provide context for the headwords, allowing researchers to differentiate between similar terms (ex. words in the context allow researchers to disambiguate “market” as in “selling into a market” – other words may include customer, demand – from “stock market” – other words may include stock, valuation, option).

Steps: To generate word clouds, each topic was selected and the word cloud is saved (during the initial topic model generation step, as above). Reviewed the word clouds associated with each topic to determine if the topic labels can be further refined, based on context. Larger terms in the word clouds have higher weights within the topic and appear more frequently

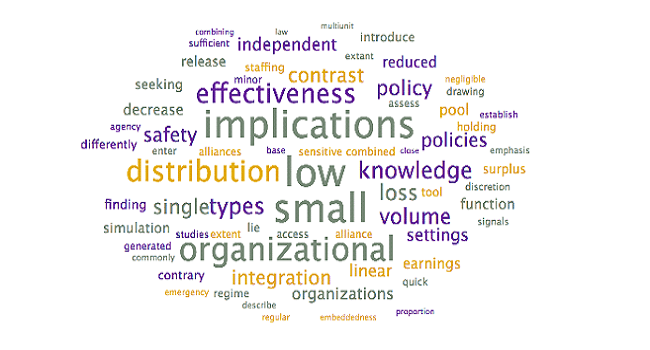

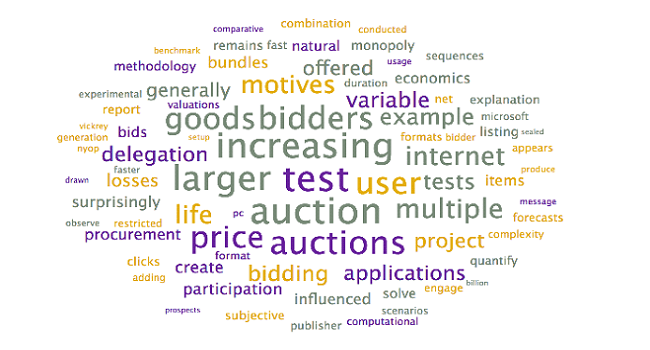

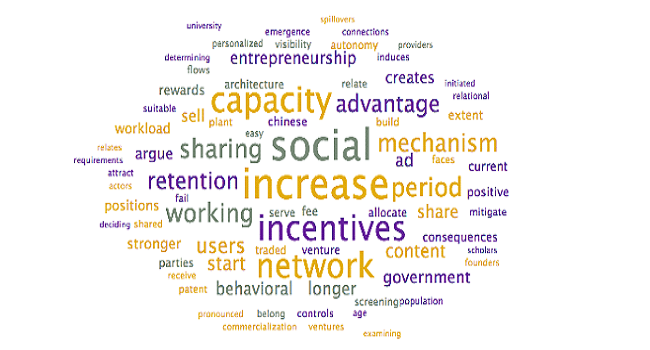

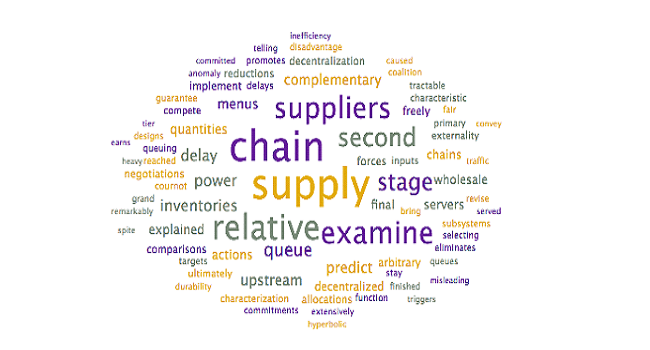

Output: The following word clouds were generated:

Analysis: The above word clouds help confirm the selected topic labels for the topics which were already clear, with one exception:

⦁ Scientific Ideas. This topic appears to discuss the evolution of scientific ideas, including the generation of hypotheses and the role it plays with productivity. It’s unclear from the word cloud exactly what the title should be, as the scientific ideas label may not be accurate. There may be a more appropriate way of Labeling this topic, such as Evolution of Ideas.

The word clouds provided only minimal additional insight for the topics that are unclear:

⦁ Survival. The words that are in the word cloud are more descriptive in nature, with a focus on explanatory terms (ex. examines, established, explanation). A suggested alternative title could be Explaining Survival; however, this still does not answer the question “Survival of what?” It cannot be established if this is survival of firms or ideas without further review.

⦁ Firm Projects. After the terms “firm” and “firms”, the focus is on the terms: technological, projects, production, and industry. A suggested alternative title could be Technological Projects and Production in Firms; however, due to the length, additional review is suggested.

⦁ Attributes. Other key terms identified by the word cloud include analyze and profits. It is inferred that this topic is in relation to identifying and analyzing attributes related to increasing profits. This is further supported by terms such as segments, gatekeepers, and customers – terms typically associated with generating income. A suggested alternative title could be Customer Attributes; however, additional review is required.

⦁ Improvement Programs. The word cloud suggests that this topic discusses the use of improvement programs to stay competitive. A new label is not suggested.

The following adjustments were made to the topic labels:

| Label (Human Readable) | Label (Word-Clouds) |

|---|---|

| R&D [randd] | R&D [randd] |

| Product Development | Product Development |

| Firm Projects | Technological Projects and Production in Firms |

| Testing Activities | Testing Activities |

| Performance / Design | Performance / Design |

| Survival | Explaining Survival |

| Engineering | Engineering |

| Attributes | Customer Attributes |

| Institutional Transfer | Institutional Transfer |

| Patents | Patents |

| Organizational Learning | Organizational Learning |

| Explaining Resistance | Explaining Resistance |

| Scientific Ideas | Evolution of Ideas |

| Communication | Communication |

| Improvement Programs | Improvement Programs |

Next Steps: Review abstracts and titles for additional context.

Review of Abstracts and Titles

A final verification is to review the abstracts and titles associated with each topic, to determine if there are more appropriate labels and whether they have been classified correctly. For each topic in a document, LDA produces a weight of that topic in the document, which approximately corresponds to the percentage of the document about that topic (Rader & Wash, 2015). This can be used to identify the primary and secondary topics present in a document and help the researcher identify which articles to review.

Steps:

⦁ In the sheet for the selected topic model, inserted two new columns: Highest Weight and Second Highest Weight.

⦁ Used the INDEX function of Excel to identify the topic with the highest weight across all topics in the topic model. =INDEX($A$1:$AN$1,0,MATCH(LARGE($A2:$AN2,1),$A2:$AN2,0))

⦁ Used the INDEX function of excel to identify the topic with the second-highest weight across all topics in the topic model.

=INDEX($A$1:$AN$1,0,MATCH(LARGE($A2:$AN2,2),$A2:$AN2,0))

⦁ Generated a pivot table that identifies the number of articles associated with the highest topic for each article;

⦁ In the original topic model spreadsheet, used the Sort & Filter functionality to identify highest-weighted articles in each topic.

⦁ Review titles & abstracts for articles for top ~10% of highest weighted articles for each topic (more if the count was less than 10 articles).

⦁ Adjust topic labels as required.

Output: The following table was generated to determine the overall number of articles associated with each topic:

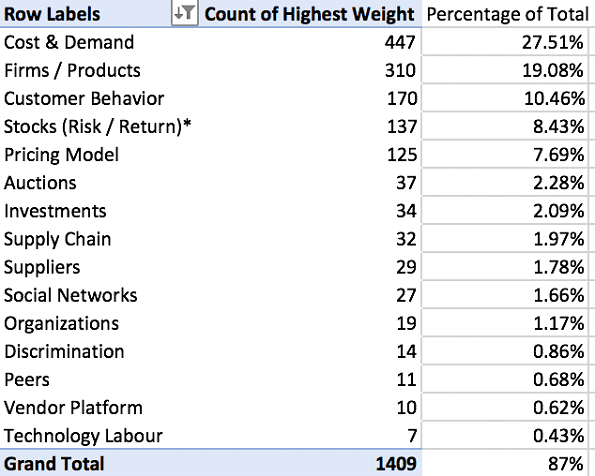

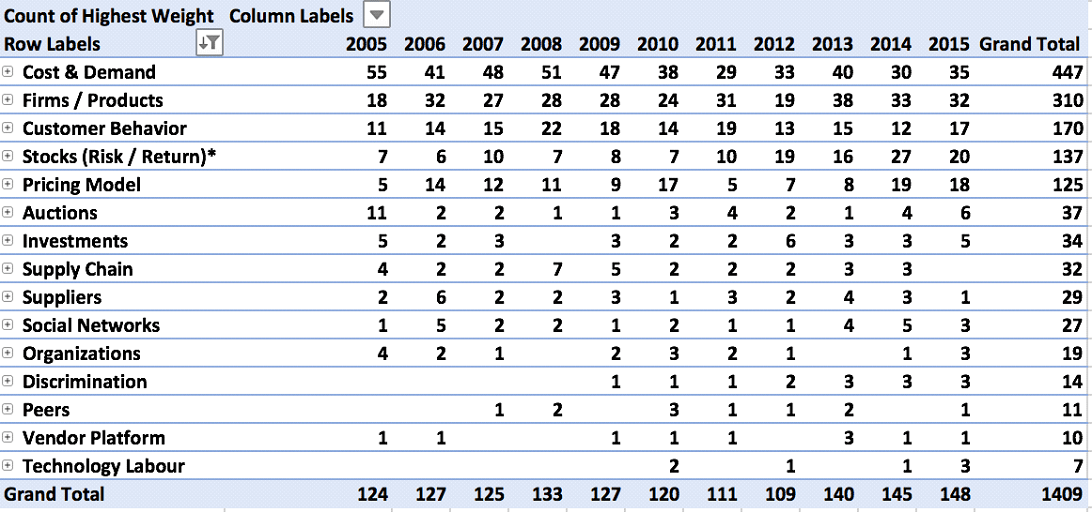

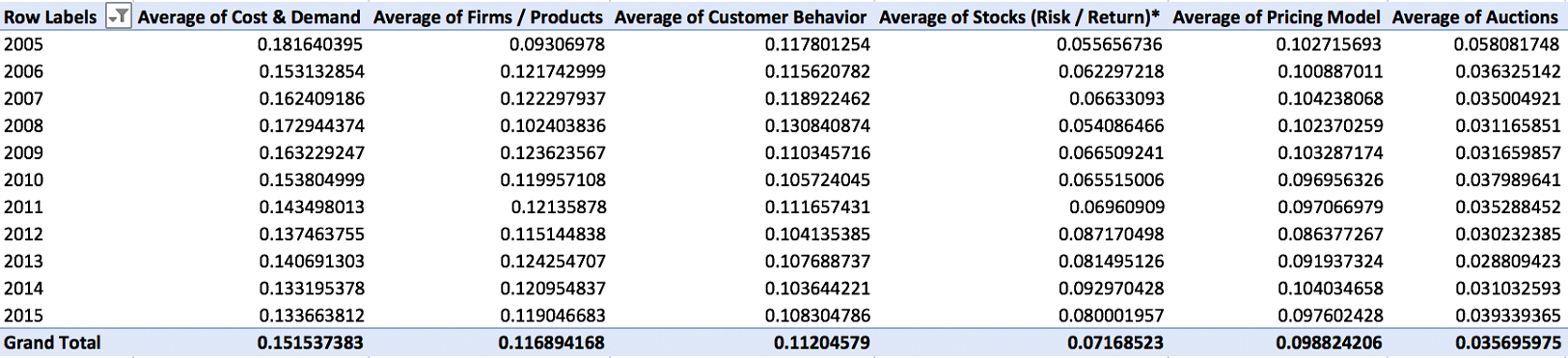

| Row Labels | Count of Highest | Percentage of Total |

|---|---|---|

| R&D | 65 | 31% |

| Product Development | 65 | 31% |

| Technological Projects and Production in Firms | 23 | 11% |

| Explaining Survival | 7 | 3% |

| Performance / Design | 6 | 3% |

| Institutional Transfer | 6 | 3% |

| Customer Attributes | 65 | 31% |

| R&D | 6 | 3% |

| Organizational Learning | 6 | 3% |

| Testing Activities | 65 | 31% |

| R&D | 5 | 2% |

| Evolution of Ideas | 5 | 2% |

| Communication | 4 | 2% |

| Engineering | 3 | 1% |

| Patents | 3 | 1% |

| Explaining Resistance | 3 | 1% |

| Improvement Programs | 2 | 1% |

| Grand Total | 209 | 84% |

R&D was identified as the primary topic in 65 of the articles, representing over 31% of the articles in Corpus A. Similarly, Product Development represents over 31% of the articles in Corpus A and was identified as the primary topic for 65 of the articles. The combined total of these two categories is in excess of 62% of the articles in the journal. This is expected, as the articles selected by Shane and Ulrich (2004) focused on research and development, innovation, product development, and entrepreneurship. To ensure the articles are labeled correctly, the second highest weight topics should be reviewed for both R&D and Product Development.

It was determined that when sorted by topic weight, the topic Communication included several articles that the model has identified as being associated with R&D and Product Development (Highest Weight) in addition to Communication (Second Highest Weight). Upon review of the titles and abstracts for these articles, it became apparent that the articles with a weight of 0.25 or more discuss communication. As such, they were manually adjusted to be associated with Communication.

| Topic Weight (Communication) | Highest Weight | Second Highest Weight | Manual Allocation |

|---|---|---|---|

| 0.386892945 | Communication | R&D | Communication |

| 0.31864199 | Communication | Firms | Communication |

| 0.287499875 | Communication | R&D | Communication |

| 0.274573684 | R&D | Communication | Communication |

| 0.25113526 | R&D | Communication | Communication |

When the same process was repeated across all topics, it resulted in the following new distribution of articles per topic:

| Topic | Manual Allocation | Percentage of Total |

|---|---|---|

| Product Development | 58 | 28% |

| R&D | 58 | 28% |

| Technological Projects and Production in Firms | 24 | 11% |

| Customer Attributes | 8 | 4% |

| Performance / Design | 8 | 4% |

| Explaining Survival | 8 | 4% |

| Patents | 6 | 3% |

| Evolution of Ideas | 6 | 3% |

| Institutional Transfer | 6 | 3% |

| Communication | 6 | 3% |

| Organizational Learning | 6 | 3% |

| Explaining Resistance | 4 | 2% |

| Engineering | 5 | 2% |

| Testing Activities | 4 | 2% |

| Improvement Programs | 2 | 1% |

Analysis: After reviewing the titles and abstracts, the following was observed:

⦁ Communication. The top five articles associated with this topic (>0.25 weight) focus on how communication impacts R&D, with one article using terms the topic modeling system appears to have identified as being synonymous with communication (“interacting process”).

⦁ Customer Attributes. This group of journal articles discusses customer segments and managerial decisions. It is better labeled as Decision Making, as this applies equally to both customer segmentation and managerial decisions.

⦁ Technological Projects and Production in Firms. This group of journal articles discusses how research projects are selected and resources allocated, with resources being either internal, governmental, or venture capital funds. A more appropriate title would be Resource Allocation.

⦁ Explaining Survival. This group of journal articles discusses common survival techniques – including advertising, diffusion of innovation, and contracting – as they apply to both startups and established companies. A better label for this topic would be Survival Techniques.

⦁ Testing Activities. This group of journal articles predominantly refers to using lead users to test and develop concepts. A suggested alternative title is Lead Users.

⦁ Note: Two outlier articles were identified and reallocated to their second-highest weighted topics as they did not fit the overall patterns: Computational Experience with Variants of the Balas Algorithm Applied to the Selection of randd Projects (0.33) and Entrepreneurial Ability, Venture Investments, and Risk Sharing (0.28).

⦁ Patents. The review of this group of articles identified that this is a cohesive topic that discusses patents. Interestingly, the top-weighted article (0.47) does not have patents as the focus of the article – they are merely the dataset used by the authors for a study in relation to a separate research question (Technology Firms and New Firm Formation).

⦁ Note: One article that focused on patents (Patents and Innovation: An Empirical Study) had a relatively low topic weight in relation to Patents (0.15). Instead, the topic model assigned this article to Product Development (0.20) and R&D (0.18).

⦁ Explaining Resistance. This is a cohesive topic that discusses resistance to adopting innovation within a firm.

⦁ Note: There are two outlier articles in this topic, including the article with the highest weight in this topic (0.35). The article – Commercializing Knowledge: University Science, Knowledge Capture, and Firm Performance in Biotechnology – may be better allocated to Technology Transfer, but is weighted extremely low (0.09) by the topic modeling software. This article has been left as being allocated to Explaining Resistance to avoid unnecessary manual intervention, as it could not be allocated to their second-highest weighted topics.

⦁ Improvement Programs. A review of the top three articles associated with this topic identified no clear topic (State-Level Efforts to Transfer Manufacturing Technology: A Survey of Programs and Practices, A Nonsequential randd Search Model, CEO Characteristics and Firm randd Spending.) Due to the low value and lack of cohesiveness in the articles, this was removed as a topic from the model.

With the removal of Improvement Programs, a total of 207 articles are classified using this topic model (84% of the original 248 in Corpus A). The following adjustments are made to the topic labels:

| Label (Human Readable) | Label (Word-Clouds) | Updated Label (Title/Abstract Review) |

|---|---|---|

| R&D [randd] | R&D | R&D |

| Product Development | Product Development | Product Development |

| Firm Projects | Technological Projects and Production in Firms | Resource Allocation for R&D |

| Testing Activities | Testing Activities | Lead Users |

| Performance / Design | Performance / Design | Design Performance |

| Survival | Explaining Survival | Survival Techniques |

| Engineering | Engineering | Organizational Structure |

| Attributes | Customer Attributes | Decision Making |

| Institutional Transfer | Institutional Transfer | Technology Transfer (Universities) |

| Patents | Patents | Patents |

| Organizational Learning | Organizational Learning | Organizational Learning |

| Explaining Resistance | Explaining Resistance | Explaining Resistance (Individuals) |

| Scientific Ideas | Evolution of Ideas | Evolution of Ideas |

| Communication | Communication | Communication |

| Improvement Programs | [Removed.] | [Removed.] |

Final Topic Model: Description and Visualization

After selection and verification of the model, the final list of topics and their interpretation are described in Table 4-9, below.

| Topic | # of Articles | Years Covered | % of Total | Description |

|---|---|---|---|---|

| Product Development | 58 | 1964-2004 | 28% | The articles associated with this topic discuss product development and associated considerations in the management science. This includes timing for purchasing new products, market timing / entry decision, diffusion theories, development cycles, and associated models |

| R&D | 58 | 1964-2002 | 28% | The articles discuss R&D and innovation. These include innovation adoption, budget allocation to innovation, discussion of R&D models, etc. |

| Resource Allocation for R&D | 24 | 1968-2003 | 11% | This topic discusses the allocation of resources for R&D, with a focus on public external funding (incl. federal policies, government seed money, subsidies/entry taxes), private external funding (seed, venture capitalists), and internal funding through product life cycles (product selection choices, R&D models, resource allocation) |

| Decision Making | 8 | 1980-2002 | 4% | This topic focuses on measuring decision making, as it relates to user segmentation and managerial decisions. It addresses a broad range of industries, including software and green product development. Most of the articles are published in the 1980s. |

| Design Performance | 8 | 1977-1998 | 4% | This topic discusses measuring product design performance models, looking at heuristics for evaluating optimal product design models. Timing of activities (concurrent, sequential) is discussed |

| Survival Techniques | 8 | 1978-2002 | 4% | This topic discusses what actions must be taken to ensure the survival of a firm. Articles discuss the influence of advertising on product diffusion, the role of contracting in firm survival, and information asymmetry in startups. |

| Patents | 6 | 1984-2002 | 3% | This articles in this topic are mixed – they discuss the role of patents as well as the adoption of new technologies. |

| Evolution of Ideas | 6 | 1978-2003 | 3% | The articles associated with this topic discuss how ideas evolve within an organization, with a focus on knowledge within the firm. This includes “tacit knowledge and cumulative learning” and the generation of ideas. |

| Technology Transfer (Universities) | 6 | 1992-2003 | 3% | This is a particularly cohesive topic – focus is on technology transfer between universities and firms. For the six articles, institutional technology transfer or knowledge transfer are explicitly described in the titles, with a focus on technology licensing in the early 2000s (4 of 6 articles). |

| Communication | 6 | 1973-1998 | 3% | These articles focus on how communication impacts R&D, with one article using terms the topic modeling system appears to have identified as being synonymous with communication (“interacting process”) (Effectiveness of Nominal and Interacting Group Decision Processes for Integrating randd and Marketing). |

| Organizational Learning | 6 | 1975-2003 | 3% | This topic discusses learning within an organization, with a specific focus on the learning curve (62%). Of the eight articles associated with this topic, two appear in the 1970s while the rest are published between 1990 and 2003, with those associated with the concept of a “learning curve” being published between 1990-2001. |

| Explaining Resistance (Individuals) | 4 | 1988-2002 | 2% | This topic relates to the acceptance of new technology by different individuals, with an emphasis on software adoption by managers. |

| Organizational Structure | 5 | 1983-2002 | 2% | This topic discusses the effect of organizational structure and the allocation of firm resources (human capital), with a particular emphasis on business units. |

| Lead Users | 4 | 1988-2002 | 2% | This grouping of articles discusses the shifting of innovation to users (“lead users”) through market research and toolkits, in addition to testing of new product concepts. |

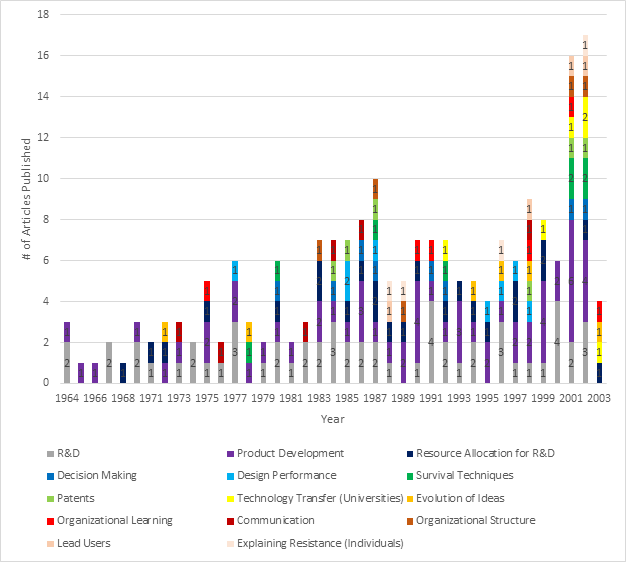

Distribution of Articles Across Topics by Year

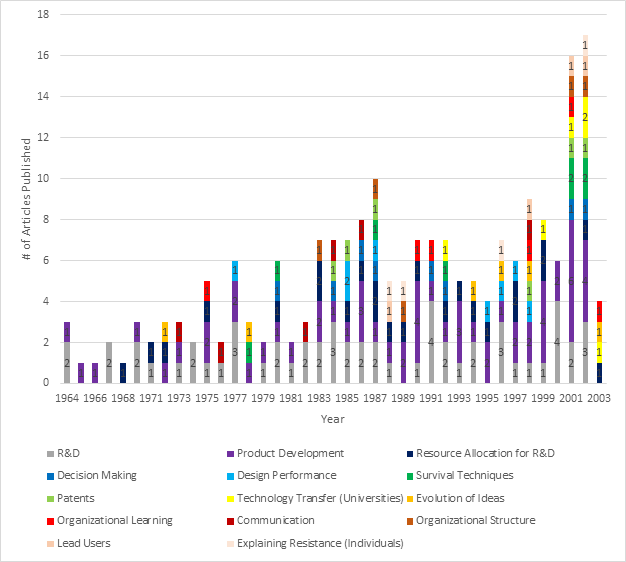

The following table identifies the number of articles published per topic, per year:

Figure 4-13 – 1954-2004 Distribution of Articles Across Topics By Year

Starting in 1970, the publication of articles related to R&D remains relatively consistent; however, in the early 1960s there are several years without any publications associated with R&D. Table 4-13 shows the increase in interest in Product Development particularly in the late 1990s and early 2000s, as well as the appearance of Technology Transfer (Universities) in the early 2000s. The publication of articles related to other topics varies based on this chart; additional insights are likely to be generated through the graph identifying topics over time (Figure 4-14).

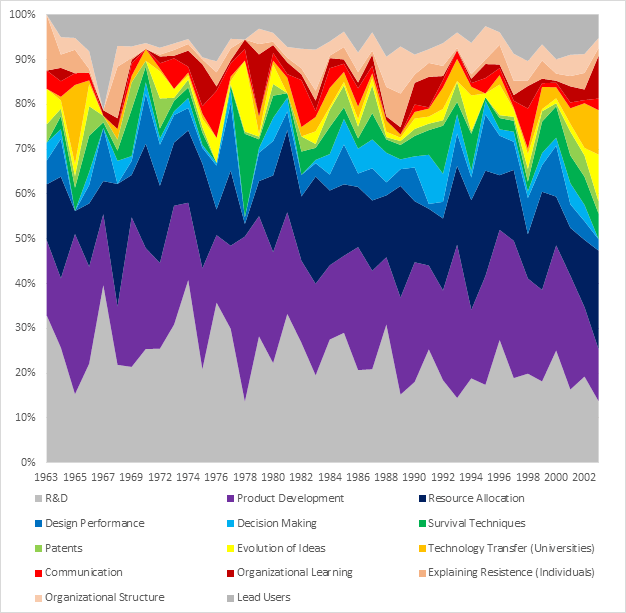

Evolution of Topics over Time

Figure 4-14 – 1954-2004 Average Topic Weights By Year

At a glance, there is considerable variation in terms of the overall percentage of any given topic (between 6% and 25%). The topics with the most articles (Product Development, R&D, and Resource Allocation for R&D) show the widest range over the 50-year period: Product Development varies by 25% (minimum 9%, maximum 34%), followed by Research & Development (minimum 11%, maximum 33%) and Resource Allocation for R&D (minimum 2%, maximum 26%). All other topics fluctuate between 0-17% over the same reporting period. This can be explained by examining the time period and sample size: each of the remaining topics have 8 articles or less published over a 50-year period. As such, each article published has a larger impact on the popularity of the topic.

Comparison to Expert Review (Shane and Ulrich, 2004)

Prior to proceeding to the analysis of Corpus B, the results of the topic modeling process for Corpus A will be compared to those of an expert review. The methodology of Shane and Ulrich (2004) is as follows:

⦁ Reviewed all scholarly articles published in Management Science from 1954 to 2004;

⦁ Identified articles that fall within the domain of the current department of Research and Development, Innovation, Product Development, and Entrepreneurship;

⦁ Scanned titles and abstracts of every article published in the journal for the following keywords: entrepreneur, entrepreneurship, venture, product development, product design, technological innovation, and research and development (R&D);

⦁ Scanned abstracts “to see if the articles fit the domain of our department without using a relevant key word”;

⦁ Excluded articles focused narrowly on information technologies (Information Systems department is considered separate) as well as notes, book reviews, and other short pieces; and

⦁ Generated a list of articles for review (250).

The tables produced by Shane and Ulrich (2004) are identified in the left-hand column of Table 4-10. The results that can be replicated or extended using topic modeling are identified in the right-hand column. Not all the tables generated by Shane and Ulrich can be easily replicated using common and/or open-source topic-modeling software. Below is a comparison of what Shane and Ulrich (2004) generated as compared to what could be generated with the available data and topic modeling tools:

| Table | Shane and Ulrich (2004) | Topic Modeling (Orange/Excel) |

|---|---|---|

| 1 | List of themes and subthemes related to innovation, whether applied to products, technologies, or firms. It includes the creation of products, the commercialization of new technologies, and the birth of new companies. For the themes with substantial prior research or with an established academic structure, identify several subthemes. | List of topics generated utilizing the titles and abstracts of the articles, using topic modeling (LDA) (Table 4-9). |

| 2 | The number of articles published in the field of innovation in each five-year period since the inception of the journal, along with the percentage this number represents of the total number of articles published by the journal overall. | While this would have been possible to complete with a complete data set, the Web of Science database was missing eight years of data. Manual updating is recommended for future research. |

| 3 | Identify the distribution of articles across themes by decade. Identify which themes are more important now than they were when Management Science began and which have become less important. | Identify the distribution of articles – and their relative topic weights – across five decades. Identify which themes are more important now (Figure 4-13, Figure 4-14). |

| 4 | The change in the types of papers published. It shows the percentage distribution of papers across conceptual, formal, empirical, and qualitative by decade. | This could be completed by manually identifying which keywords are related to conceptual, formal, empirical or qualitative, and then comparing to the keywords for each topic; however, this cannot be completed “out of the box” using topic modeling software. This is suggested for future work. |

| 5 | Authorship patterns. | It was not possible to identify authorship patterns using topic modeling. |

To ensure similarity in comparing the results, only those topics that represent 90% of the articles were compared to the topic model. Table 4-11 lists the topics identified by Shane and Ulrich (2004) and the number of articles associated with each topic. The topics that represent 90% of Corpus A are identified.

| Topic | # Articles | % of Total Articles | Top 90% |

|---|---|---|---|

| Adoption and Diffusion of Innovation | 32 | 12.9% | Yes |

| Development Process Management | 31 | 12.5% | Yes |

| Product Planning and Portfolios | 31 | 12.5% | Yes |

| Technology Strategy – Behaviour Studies – Economic Studies – Strategy Process | 18 12 5 | 7.3% 4.8% 2.0% | Yes |

| Basic Research and Advanced Development | 14 | 5.6% | Yes |

| Product Design | 12 | 4.8% | Yes |

| Organization Design – Communication – Decision Making – Organizational Structure | 11 10 7 | 4.4% 4.0% 2.8% | Yes |

| Concept Development | 10 | 4.0% | Yes |

| Public Policy – The Impact of Specific Government Policies – Factors that Account for the Rate of Innovation – The Effect of Innovation on Economic Growth – Tools Used by Policy Makers | 9 5 3 2 | 3.6% 2.0% 1.2% 0.8% | Yes |

| Knowledge Transfer – Knowledge Spillovers and Technology Transfer – Learning | 7 6 | 2.8% 2.4% | Yes |

| Entrepreneurship – Decision Making – Strategy and Performance – Financing – Organization Design | 5 5 4 4 | 2.0% 2.0% 1.6% 1.6% | No |

| Concept Development | 5 | 2.0% | No |

The following is a summary of the descriptions provided by Shane and Ulrich (2004):

| Topic | Summary |

|---|---|

| Adoption and Diffusion of Innovation | “The problem of explaining and predicting the adoption and diffusion of innovation (p. 136).” |

| Development Process Management | Focuses on managing product development processes. “Much of this research takes the perspective of a product development process as a collection of tasks with information flows among them. […] Perennial questions include the extent to which dependent tasks should be overlapped and the relative value of lead time and efficiently (p.136).” |

| Product Planning and Portfolios | Research focused on the question of which innovation projects to pursue. “This decision involves both assessing the inherent merit of a particular project and understanding the interactions among projects in determining the overall value of a portfolio of projects (p. 136).” |

| Technology Strategy n | Behaviour Studies. Behavioral explanations for technology strategy. “Several behavioral studies of technology strategy sought to identify the source of firm performance, but have considered a variety of topics including: creating new knowledge, the strategy-environment fit, intraorganizational relationships, and the effects of top management team characteristics (p. 135).” / Economic Studies. Economic-oriented strategy articles with empirical studies and formal models of technology strategy (p. 135). / Strategy Process. A subtheme of technology strategy research (p. 135). |

| Basic Research and Advanced Development | “These are a highly eclectic group of papers, with no critical mass of work emerging on any particular topic. The topics range from time studies of individual scientists to macro-economic models of R&D spending. […] We draw a distinction between R&D as the product-generation function of the firm and basic research and advanced development, which we define as innovative activities not directed at a specific product-development objective (p. 135).” |

| Product Design | “We define product design as the set of decisions that define the product itself. We exclude from this category a very large body of work on consumer-attribute-based design methods, including conjoint analysis. A body of work has germinated around the issues of coordinating product design with production processes, including papers on design for manufacturing, platform planning, and component sharing (p.136).” |

| Organization Design | Communication. “Communication patterns in innovative activity, which was initially internally focused and shifted to consideration of the external boundary of the organization in the 1980s (p. 135).” Decision Making. Decision making about innovation and technology including process orientation to studies of decision making and formal methods (p. 135). Organizational Structure. Explores the effect of organizational structure on innovation. The earliest subtheme explored in the journal receiving “off-and-on attention over the past 50 years, with the addition of new dimensions periodically reviving the theme (p. 135).” |

| Concept Development | “A central problem in product development is which concept to pursue. The concept is the configuration of working principles and elements that make up the product, whether a service, software, or a physical good (p. 136).” |

| Public Policy | The Impact of Specific Government Policies. “The impact of specific government policies on innovation (p. 137).” / Factors that Account for the Rate of Innovation. “The factors that influence the rate of innovation in a locale (p. 137).” / The Effect of Innovation on Economic Growth. “The effect of technological innovation on economic growth (p. 137).” / Tools Used by Policy Makers. “The tools that policy makers use to make decisions about investments in innovation (p. 137).” |

| Knowledge Transfer | Knowledge Spillovers and Technology Transfer. “Knowledge spillovers and technology transfer. Only since 1999 has this theme been important in the journal (p. 136).” Learning. First published in the 1960s and “expanding the approaches toward learning in a variety of ways (p. 136).” |

Initially, the labels of the topic model were compared to the top 90% of the topics (and subtopics) identified by Shane and Ulrich. The decision to map the topics from the LDA topic model to both topics and subtopics was due to the identification of an alignment between 33% (6/18) of the topics and subtopics (see Table 4-13). In several instances, it was evident that mapping to the topic would be less precise than mapping to the subtopic. The following table was generated:

| Shane and Ulrich (2004) | Topic Model (Orange / Excel) |

|---|---|

| Basic Research and Advanced Development | R&D. |

| Organization Design. Communication. | Communication. |

| Organization Design. Decision Making. | Decision Making. |

| Organization Design. Organizational Structure. | Organizational Structure. |

| Knowledge Transfer. Knowledge Spillovers and Technology Transfer. | Technology Transfer (Universities). |

| Knowledge Transfer. Learning. | Organizational Learning. |

As noted by Jockers (2013), topic labels are often for convenience and may not capture the complexity of a topic; consequently, the descriptions of the topics (and subtopics) were compared. This produced further alignment (1:1) between three topics (17%) but no subtopics; however, the topics of Concept Development (Shane and Ulrich) and Lead Users (topic model) would be considered a partial match as Lead Users is limited to concept development using lead users.

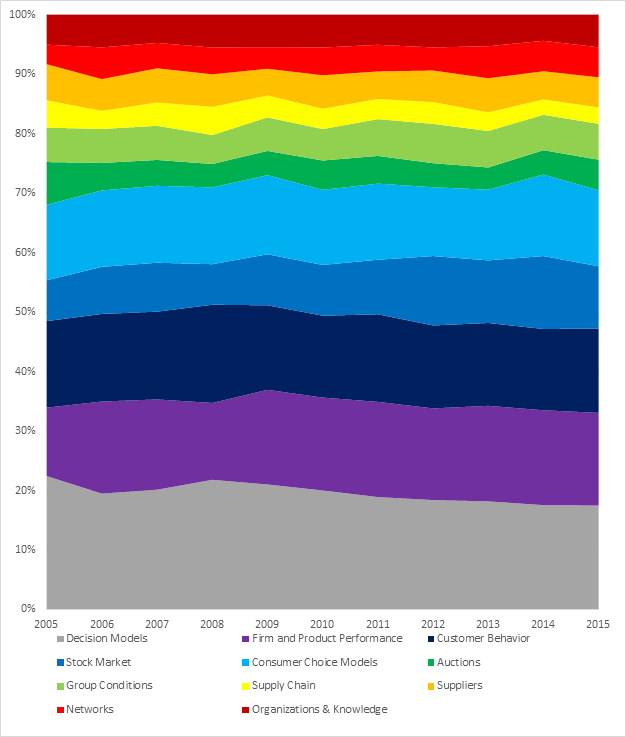

| Development Process Management. Focuses on managing product development processes. “Much of this research takes the perspective of a product development process as a collection of tasks with information flows among them. […] Perennial questions include the extent to which dependent tasks should be overlapped and the relative value of lead time and efficiently (p.136).” | Product Development. The articles associated with this topic discuss product development and associated considerations in the management science. This includes timing for purchasing new products, market timing / entry decision, diffusion theories, development cycles, and associated models. |